Electricity consumption in data centers worldwide doubled between 2000 and 2005, but the pace of growth slowed between 2005 and 2010. This slowdown was the result of the 2008 economic crisis, the increasing use of virtualization in datacenters, and the industry's efforts to improve energy efficiency. Even so, the electricity consumed by datacenters globally in 2010 amounted to 1.3% of the world's electricity use. Power consumption is now a major concern in the design and implementation of modern infrastructures because energy-related costs have become an important component of the total cost of ownership of this class of systems.

Thus, energy management is now a central issue for servers and datacenter operations, focusing on reducing all energy-related costs, such as investment, operating expenses and environmental impacts. Improving energy efficiency is a major problem in cloud computing because it has been calculated that the cost of powering and cooling a datacenter accounts for 53% of its total operational expenditure. But the pressure to provide services without any failure leads to continued scaling of systems at all levels of the power hierarchy, from the primary feed sources to the backup supplies. In order to cover worst-case situations, it is normal to over-provision Power Distribution Units (PDUs), Uninterruptible Power Supply (UPS) units, etc. For example, it has been estimated that power over-provisioning in Google data centers is about 40%.
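To make these percentages a little more tangible, here is a small back-of-the-envelope sketch in Python. It assumes that "40% over-provisioning" means installed capacity sits 40% above peak demand, which is only one possible reading of the estimate. The peak load and budget figures are hypothetical and chosen purely for illustration.

```python
def provisioned_capacity_kw(peak_demand_kw: float, over_provision: float = 0.40) -> float:
    """Installed power capacity when peak demand is padded by a safety margin.

    Assumption: over-provisioning is expressed as a fraction of peak demand.
    """
    return peak_demand_kw * (1.0 + over_provision)


def idle_headroom_kw(peak_demand_kw: float, over_provision: float = 0.40) -> float:
    """Capacity that is paid for but never drawn, even at peak."""
    return provisioned_capacity_kw(peak_demand_kw, over_provision) - peak_demand_kw


def power_and_cooling_cost(total_opex: float, share: float = 0.53) -> float:
    """Portion of operational expenditure spent on powering and cooling."""
    return total_opex * share


if __name__ == "__main__":
    peak_kw = 1000.0         # hypothetical peak load of a facility
    opex = 2_000_000.0       # hypothetical yearly operational budget

    print(f"Provisioned capacity: {provisioned_capacity_kw(peak_kw):.0f} kW")
    print(f"Unused headroom at peak: {idle_headroom_kw(peak_kw):.0f} kW")
    print(f"Power and cooling cost: {power_and_cooling_cost(opex):,.0f}")
```

Under this reading, every megawatt of peak demand carries roughly 400 kW of power infrastructure that exists only for the worst case.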

Furthermore, in an attempt to ensure the redundancy of power systems, banks of diesel generators are kept running permanently so that the system does not fail even during the moments these backup systems would take to start up. These giant generators work continuously to ensure high availability in the event of a failure of any critical system, emitting large quantities of diesel exhaust, i.e., pollution. As a result, it is estimated that only about 9% of the energy consumed by datacenters is in fact used in computing operations. Everything else is basically wasted to keep the servers ready to respond to any unforeseen power failure.

When we connect to the Internet, cyberspace can closely resemble outer space in the sense that it seems infinite and ethereal; the information is just out there. But if we think about the real-world energy and physical space that the Internet occupies, we begin to understand that things are not so simple. Cyberspace does have a real expression in physical space, and the longer we take to change our behavior toward the Internet and to see its physical characteristics clearly, the closer we come to a path of destruction for our planet.


Cyberspace's Social Impact

Although the term is fashionable and many people use it, only a few seem to know what the "cloud" really is. A recent study by Wakefield Research for Citrix shows that there is a huge difference between what U.S. citizens do and what they say when it comes to cloud computing. The survey of more than 1,000 American adults was conducted in August 2012 and showed that few average Americans know what cloud computing is.

For example, when asked what "the cloud" is, the most common answer (29%) was that it is an actual cloud, the sky, or something related to the weather. Fifty-one percent of respondents believe stormy weather can interfere with cloud computing, and only 16% were able to link the term with the notion of a computer network used to store, access and share data from Internet-connected devices. Besides, 54% of respondents claimed to have never used the cloud when in fact 95% of those who said so are actually using cloud services today via online shopping, banking, social networking and file sharing.

What these results suggest is that the cloud is indeed transparent to users, fulfilling one of its main functions, which is to provide content and services easily and immediately. However, the lack of knowledge about the computing model that supports all of our everyday activities leads to growing disengagement and, consequently, to less attention being paid to content security and privacy.

In reality, cyberspace is not an aseptic place filled only with accurate and useful information. The great interest of cyberspace lies precisely in that it allows for social vitality, based on a growing range of multimedia services. Its fascination comes from acting as a booster technology for the proliferation of all forms of sociability, being a connectivity instrument. Therefore, cyberspace is not a purely cybernetic thing, but a living, chaotic, and uncontrolled entity.

Beyond these concerns, others equally serious are emerging. By analyzing our daily use of these new technological tools, we conclude that the growth of the Internet is suffocating the planet. We have to face the CO2 emissions produced by our online activities as internal costs to the planet.

We can start by showing some awareness of the problem, restricting our uploads and even removing some. Why not? What about reducing our photos on Facebook and Instagram? Keeping them permanently available consumes energy! If no one cares about our videos on YouTube, why not delete them? At least keep them where they do not need to be consuming energy.

We still have to go further: if awareness and self-discipline are not enough, we must consider the possibility of charging for the sharing of large volumes of personal information. It is perhaps the only way to get most people to stop making thoughtless use of the cloud, clogging it by dumping huge amounts of useless information into cyberspace. The goal is not to limit access to information, which should always be open, but rather to use it properly and conscientiously.

The Evolution of Computing: Cloud Computing

Cloud computing has recently emerged as a new paradigm for hosting and delivering services over the Internet. It is attractive to business owners because it eliminates the need for users to plan ahead for provisioning and allows enterprises to start small and increase resources only when there is a rise in service demand. Cloud computing is first and foremost a concept of distributed resource management and utilization. It aims to provide a convenient endpoint access system without requiring the purchase of software, platforms or physical network infrastructure, which are instead outsourced to third parties.

This arrangement may improve competitive advantage and flexibility, but it also brings about various challenges, namely privacy and security. In cloud computing, applications, computing and storage resources live somewhere in the network, or cloud. Users don't worry about the location and can rapidly access as much or as little of the computing, storage and networking capacity as they wish, paying for it by how much they use, just as they would with water or electricity services provided by utility companies. The cloud is currently based on disjointedly operating data centers, but the idea of a unifying platform not unlike the Internet has already been proposed.
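The utility-style, pay-for-what-you-use idea can be sketched in a few lines of Python. This is only an illustration: the `Rates` and `Usage` types, the three metered quantities, and the prices are all hypothetical and do not reflect any real provider's pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Hypothetical unit prices, analogous to a utility tariff."""
    per_cpu_hour: float
    per_gb_month_stored: float
    per_gb_transferred: float


@dataclass(frozen=True)
class Usage:
    """Metered consumption for one billing period."""
    cpu_hours: float
    gb_months_stored: float
    gb_transferred: float


def monthly_bill(usage: Usage, rates: Rates) -> float:
    """Charge strictly in proportion to what was consumed."""
    return (
        usage.cpu_hours * rates.per_cpu_hour
        + usage.gb_months_stored * rates.per_gb_month_stored
        + usage.gb_transferred * rates.per_gb_transferred
    )


if __name__ == "__main__":
    rates = Rates(per_cpu_hour=0.05, per_gb_month_stored=0.02, per_gb_transferred=0.10)
    quiet_month = Usage(cpu_hours=200, gb_months_stored=500, gb_transferred=50)
    busy_month = Usage(cpu_hours=2000, gb_months_stored=500, gb_transferred=800)

    print(f"Quiet month: {monthly_bill(quiet_month, rates):.2f}")
    print(f"Busy month:  {monthly_bill(busy_month, rates):.2f}")
```

The point of the sketch is that there is no fixed up-front term in the bill: a customer who uses nothing pays nothing, and the cost follows demand up and down.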

In a cloud computing environment, the traditional role of service provider is divided into two: the infrastructure providers who manage cloud platforms and lease resources according to a usage-based pricing model, and service providers, who rent resources from one or many infrastructure providers to serve the end users. Cloud computing providers offer their services according to several fundamental models: software as a service, infrastructure as a service, platform as a service, desktop as a service, and more recently, backend as a service.

The backend as a service computing model, also known as "mobile backend as a service", is a relatively recent development in cloud computing, with most commercial services dating from 2011. This is a model for providing web and mobile application developers with a way to link their applications to backend cloud storage while also providing features such as user management, push notifications, and integration with social networking services. These services are provided via custom software development kits (SDKs) and application programming interfaces (APIs). Although similar to other cloud-computing developer tools, this model is distinct in that it specifically addresses the cloud-computing needs of web and mobile application developers by providing a unified means of connecting their apps to cloud services. The global market for these services is estimated to be worth hundreds of millions of dollars in the coming years.

Clearly, public cloud computing is at an early stage in its evolution. However, all of the companies offering public cloud computing services have data centers; in fact, they are building some of the largest data centers in the world. They all have network architectures that demand flexibility, scalability, low operating cost, and high availability. They are built on top of products and technologies supplied by Brocade and other network vendors. These public cloud companies are building businesses on data center designs that virtualize computing, storage, and network equipment, which is the foundation of their IT investment. Cloud computing over the Internet is commonly called “public cloud computing.” When used in the data center, it is commonly called “private cloud computing.” The difference lies in who maintains control and responsibility for servers, storage, and networking infrastructure and ensures that application service levels are met. In public cloud computing, some or all aspects of operations and management are handled by a third party “as a service.” Users can access an application, or computing and storage resources, using the Internet and the HTTP address of the service.


The Evolution of Computing: Virtualization

In the 1980s, the countless PCs in organizations effectively killed the need for virtualization as a way of enabling multi-tasking. Virtualization was widely abandoned at that time and not picked up again until the late 1990s, when the technology would find a new use and purpose. The booming PC and datacenter industry brought an unprecedented increase in the need for computer space, as well as in the cost of the power needed to support these installations. Back in 2002, data centers already accounted for 1.5 percent of total U.S. power consumption, and that consumption was growing by an estimated 10 percent every year. More than 5 million new servers were deployed every year, adding a power demand equivalent to that of thousands of new homes. As experts warned of excessive power usage, hardware makers began focusing on more power-efficient components to enable future growth and reduce the need for data center cooling. Data center owners began developing smart design approaches to make the cooling and airflow in data centers more efficient.

At this time, most computing was supported by the highly inefficient x86-based IT model, originally created by Intel in 1978. Cheap hardware created the habit of over-provisioning and under-utilizing. Any time a new application was needed, it often required multiple systems for development and production use. Take this concept and multiply it out by a few servers in a multi-tier application, and it wasn't uncommon to see 8-10 new servers ordered for every application that was required. Most of these servers went highly underutilized since their existence was based on a non-regular testing schedule. It also often took a relatively intensive application to even put a dent in the total utilization capacity of a production server.

In 1998, VMware solved the problem of virtualizing the old x86 architecture, opening a path toward getting control over the wasteful nature of IT data centers. This server consolidation effort is what helped establish virtualization as a go-to technology for organizations of all sizes. IT departments started to notice capital expenditure savings from buying fewer but more powerful servers, each able to handle the workloads of 15-20 physical servers. Operational expenditure savings were achieved through the reduced power consumption required for powering and cooling servers. Organizations also came to realize that virtualization provided a platform for simplified availability and recoverability. Virtualization offered a more responsive and sustainable IT infrastructure that afforded new opportunities either to keep critical workloads running or to recover them more quickly than ever in the event of a more catastrophic failure.
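The consolidation arithmetic behind those savings is simple enough to sketch in Python. The 15-20 range comes from the text above; the fleet size of 100 servers and the function names are hypothetical and used only for illustration.

```python
import math


def hosts_needed(physical_servers: int, consolidation_ratio: int) -> int:
    """Virtualization hosts required if each host absorbs the workload
    of `consolidation_ratio` former physical servers."""
    if physical_servers < 0 or consolidation_ratio < 1:
        raise ValueError("need a non-negative server count and a ratio of at least 1")
    return math.ceil(physical_servers / consolidation_ratio)


def servers_retired(physical_servers: int, consolidation_ratio: int) -> int:
    """Machines that no longer have to be bought, powered and cooled."""
    return physical_servers - hosts_needed(physical_servers, consolidation_ratio)


if __name__ == "__main__":
    fleet = 100  # hypothetical number of underutilized physical servers
    for ratio in (15, 20):
        print(
            f"ratio {ratio}:1 -> {hosts_needed(fleet, ratio)} hosts, "
            f"{servers_retired(fleet, ratio)} servers retired"
        )
```

The rounding up matters: a partly filled host still has to be purchased and powered, so the real saving is always slightly less than the ratio alone suggests.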


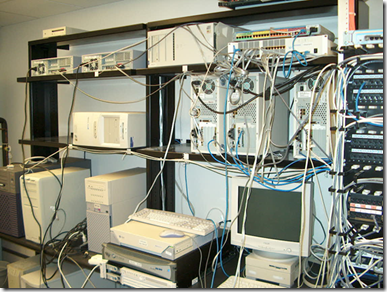

The Evolution of Computing: The Internet Datacenter

The boom of datacenters and datacenter hosting came during the dot-com era. Countless businesses needed nonstop operation and fast Internet connectivity to deploy systems and establish a presence on the Web. Installing data center hosting equipment was not a viable option for smaller companies. As the dot-com bubble grew, companies began to understand the importance of having an Internet presence. Establishing this presence required fast and reliable Internet connectivity. Companies also had to be able to operate 24 hours a day in order to deploy new systems.

Soon, these new requirements resulted in the construction of extremely large data facilities, responsible for the operation of computer systems within a company and the deployment of new systems. However, not all companies could afford to operate a huge datacenter. The physical space, equipment requirements, and highly-trained staff made these large datacenters extremely expensive and sometimes impractical. In order to respond to this demand, many companies began building large facilities, called Internet Datacenters, which provided businesses of all sizes with a wide range of solutions for operations and system deployment.

New technologies and practices were designed and implemented to handle the operational requirements and scale of such facilities. These large datacenters revolutionized technologies and operating practices within the industry. Private datacenters were born out of this need for an affordable Internet datacenter solution. Today's private datacenters allow small businesses to have access to the benefits of the large Internet data centers without the expense of upkeep and the sacrifice of valuable physical space.


The Evolution of Computing: Distributed Computing

After the microcomputers came the world of distributed systems. One important characteristic of the distributed computing environment was that all of the major OSs were available on small, low-cost servers. This meant that it was easy for various departments or any other corporate group to purchase servers outside the control of the traditional, centralized IT environment. As a result, applications often just appeared without following any of the standard development processes. Engineers programmed applications on their desktop workstations and used them for what later proved to be mission-critical or revenue-sensitive purposes. As they shared applications with others in their departments, their workstations became servers that served many people within the organization.

In the distributed computing environment, it was common for applications to be developed following a one-application-to-one-server model. Because funding for application development came from vertical business units, which insisted on having their applications on their own servers, another server was added each time an application was put into production. The problem created by this approach is significant because the one-application-to-one-server model is really a misnomer. In reality, each new application generally required the addition of at least three new servers, and often more: development servers, test servers, training servers, and cluster and disaster recovery servers.

Therefore, it became standard procedure in big corporations to purchase 8 or 10 servers for every new application being deployed. It was the prelude to the enormous bubble that would ultimately cause the collapse of many organizations that thought cyberspace was an easy and limitless way to make money.


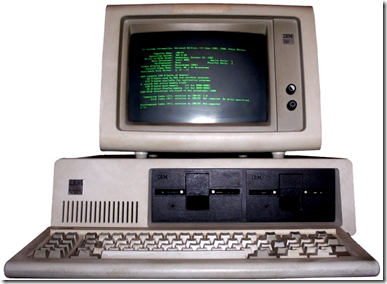

The Evolution of Computing: Personal Computing

Initially, companies developed applications on minicomputers because these gave them more freedom than they had in the mainframe environment. The rules and processes used in this environment were typically more flexible than those in the mainframe environment, giving developers freedom to be more creative when writing applications. In many ways, minis were the first step towards freedom from mainframe computing. However, with each computer being managed the way its owner chose to manage it, a lack of accepted policies and procedures often led to a somewhat chaotic environment. Further, because each mini vendor had its own proprietary OS, programs written for one vendor's mini were difficult to port to another mini. In most cases, changing vendors meant rewriting applications for the new OS. This lack of application portability was a major factor in the demise of the mini.

During the 1980s, the computer industry experienced the boom of the microcomputer era. In the excitement accompanying this boom, computers were installed everywhere, and little thought was given to the specific environmental and operating requirements of the machines. From this point on, computing that was previously done on terminals that served only to interact with the mainframe (the so-called “dumb terminals”) would be done on personal computers, machines that have their own resources. This new computing model was the embryo of modern cyberspace with all the services that we know today.

The Evolution of Computing: The Mainframe Era

Modern datacenters have their origins in the huge computer rooms of the early computing industry. Old computers required an enormous amount of power and had to constantly be cooled to avoid overheating. In addition, security was of great importance because computers were extremely expensive and commonly used for military purposes, so basic guidelines for controlling access to computer rooms were devised.

The Evolution of Computing: Overview

In our time, cyberspace is an integral part of the lives of many millions of citizens around the world who dive into it for work or just for fun. Our daily life is now occupied by a plethora of user-friendly technologies that allow us to have more time for other activities, increase our productivity and have far more access to all kinds of information. But it was not always so, and to reach this stage we went through about 50 years of development. This series of articles will summarize the evolution of the different computing models that underpin much of modern life and discuss some of the future trends that will certainly change the way we relate to information technology and interact with each other.

In recent decades, computer technology has undergone a revolution whose effects have grown ever more complex and have produced a new society. At some point, we started to take for granted the use of all the technology at our disposal, without thinking about the future consequences of our actions. Among all that we take for granted today, cyberspace is near the top of the list. The promise of the Internet for the twenty-first century is to provide everything, anytime and anywhere. All human achievements, all culture, all the news will be within reach with just one simple mouse click. The history of computers and cyberspace is critical to understanding contemporary communication. Although they are not the only element of communication in the second half of the twentieth century, they must, by virtue of their importance, come first in any credible historical analysis, since they were handed a huge set of tasks that go well beyond the realm of communication.

For many Internet users, access to this virtual world is a sure thing, but for many others it does not even exist. Despite its exponential growth and its geographical dispersion, the physical distribution of communications networks is still far from uniform across all regions of the planet. Moreover, the spread of mobile telecommunications gives cyberspace a character of uniformity which permits an almost complete abstraction of its physical support. The last few years have been a truly explosive growth phase in information technology, particularly the Internet. Following this expansion, the term cyberspace has become commonly used to describe a virtual world that Internet users inhabit when they are online, accessing the most diverse content, playing games or using the widely varying interactive services that the Internet provides. But it is crucial to distinguish cyberspace from telematics networks, because the two concepts are widely confused.

Telematics produces distance communication via computers and telecommunications, while cyberspace is a virtual environment that relies on these media to establish virtual relationships. Thus, I believe the Internet, while being the main global telematics network, does not represent the entire cyberspace, because cyberspace is something larger that can arise from people's relationship with other technologies such as GPS, biometric sensors and surveillance cameras. In reality, cyberspace can be seen as a new dimension of society where social relationship networks are redefined through new flows of information.

We can visit a distant museum from the comfort of our home, or access any news from a newspaper published thousands of miles away, with a simple mouse click on our computer. Thus, it becomes necessary to think about regulating this area for the sake of the common good of the planet. The economy of cyberspace has no mechanism of self-regulation that limits its growth, so the current key issues for business are getting cheap energy and keeping transmission times in milliseconds. Revenues from services like Facebook and YouTube are not derived from costs to users so, from the user's point of view, cyberspace is free and infinite. As long as people don't feel any cost in using cyberspace, they will continue to use it without any restrictions, and this will soon become unbearable.

Therefore, the purpose of these articles is to present a brief analysis of the rise of these machines and associated technologies and of the transformations they have undergone in recent decades, directly affecting the lives of human beings and their work and communication processes.
