Clustering Basics

Clustering is the use of multiple computers and redundant interconnections to form what appears to be a single, highly available system. A cluster provides protection against downtime for important applications or services that need to be always available by distributing the workload among several computers in such a way that, in the event of a failure in one system, the service will be available on another.

The basic concept of a cluster is easy to understand; a cluster is two or more systems working in concert to achieve a common goal. Under Windows, two main types of clustering exist: scale-out/availability clusters known as Network Load Balancing (NLB) clusters, and strictly availability-based clusters known as failover clusters. Microsoft also has a variation of Windows called Windows Compute Cluster Server.

When a computer unexpectedly fails or is intentionally taken down, clustering ensures that the processes and services being run switch, or "fail over," to another machine in the cluster. This happens without interruption or the need for immediate administrator intervention, providing a high-availability solution in which critical data remains available at all times.

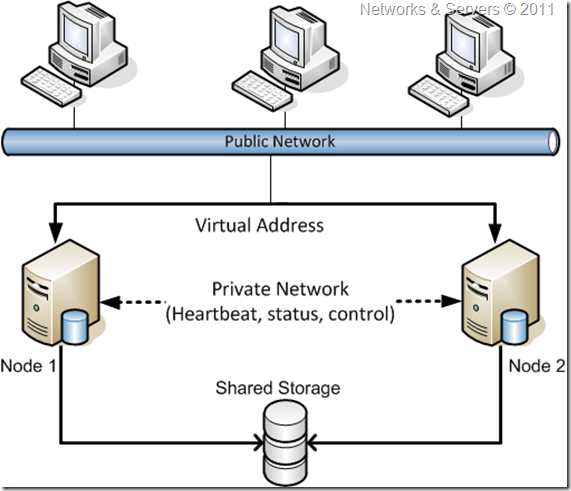

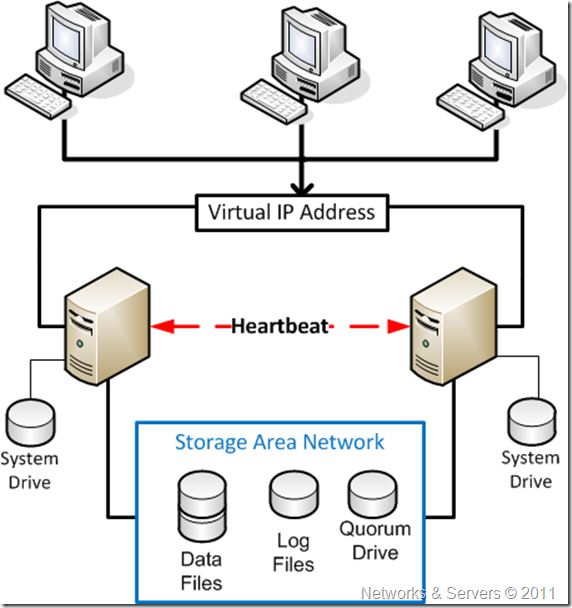

Failover clusters are typically made up of two servers (occasionally more), as in the configuration shown in the figure. Under normal conditions one server (the primary) actively processes client requests and provides the services, while the other server (the failover) monitors the primary so that it can take over and run the services in the event of a failure.

The primary system is monitored, with active checks every few seconds to ensure that the primary system is operating correctly. When the cluster consists only of two servers, the monitoring can happen on a dedicated cable that interconnects the two machines, or on the network. The system performing the monitoring may be either the failover computer or an independent system (called the cluster controller).

From a client point of view, the application is accessible via a DNS name, which in turn maps to a virtual IP address that can float from one machine to another, depending on which machine is active. If the active system fails, or if components associated with it (such as network hardware) fail, the monitoring system detects the failure and the failover system takes over operation of the service.
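The monitoring and floating virtual IP described above can be sketched as follows. This is a minimal illustration, not how the Windows Cluster Service is implemented: the class names, the `missed_limit` threshold, and the example address are all hypothetical.

```python
class Node:
    """A cluster member; `healthy` stands in for the result of a real health check."""

    def __init__(self, name: str):
        self.name = name
        self.healthy = True


class FailoverPair:
    """Two-node cluster whose virtual IP follows whichever node is active."""

    def __init__(self, primary: Node, standby: Node, virtual_ip: str, missed_limit: int = 3):
        self.primary = primary
        self.standby = standby
        self.virtual_ip = virtual_ip      # address clients reach through the DNS name
        self.missed_limit = missed_limit  # hypothetical threshold, not a Windows default
        self.missed = 0
        self.active = primary

    def heartbeat(self) -> Node:
        """Run one monitoring check and return the node that owns the virtual IP."""
        if self.active.healthy:
            self.missed = 0
        else:
            self.missed += 1
            if self.missed >= self.missed_limit:
                # Fail over: the virtual IP now points at the other node.
                self.active = self.standby if self.active is self.primary else self.primary
                self.missed = 0
        return self.active
```

Clients keep using the same name and virtual IP throughout; only the `active` node behind it changes once enough consecutive checks have been missed.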

A key element of the failover clustering solutions is that both computers share a common file system. One approach is to provide this by using a dual ported RAID (Redundant Array of Independent Disks), so that the disk subsystem is not dependent on any single disk drive. An alternative approach is to utilize a SAN (Storage Area Network).

A Failover cluster provides:

- High availability by reducing unplanned downtime and increasing the reliability of services and applications;

- High scalability by allowing administrators to assign up to 16 nodes to one cluster, which enhances performance and availability and allows for incremental growth.

Comparing Clusters

As we have already seen in previous posts, NLB clusters are primarily meant to provide high availability to services that rely on the TCP/IP protocol. NLB works as a load balancer, distributing the load as evenly as possible among multiple computers, each running its own independent, isolated copy of an application such as IIS. An NLB cluster adds availability as well as scalability to web servers or FTP servers. Load balancing is not a Windows-specific concept; it can also be achieved with hardware load balancers.

A Windows feature-based NLB implementation is one where multiple servers (up to 32) run independently of one another and do not share any resources. Client requests connect to the farm of servers and can be sent to any of them, since they all provide the same functionality. The algorithms behind NLB keep track of which servers are busy, so when a request comes in, it is sent to a server that can handle it. In the event of an individual server failure, NLB knows about the problem and can be configured to automatically redirect the connection to another server in the NLB cluster. NLB cannot be configured on servers that are participating in a failover cluster, so it can only be used with standalone servers.
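To make the idea of spreading requests over a farm and skipping failed servers concrete, here is a minimal sketch. It assumes a simple hash of the client address over the healthy hosts; this is an illustrative scheme, not Microsoft's actual NLB algorithm, and `pick_host` and the host names are hypothetical.

```python
import zlib


def pick_host(client_ip: str, hosts: dict[str, bool]) -> str:
    """Map a client to one of the healthy hosts in the farm.

    `hosts` maps a host name to True (up) or False (failed).
    """
    healthy = sorted(name for name, up in hosts.items() if up)
    if not healthy:
        raise RuntimeError("no healthy hosts left in the NLB cluster")
    # A stable hash keeps the same client on the same host while the farm is unchanged.
    index = zlib.crc32(client_ip.encode()) % len(healthy)
    return healthy[index]
```

Because every host runs the same application and shares nothing, any healthy host is a valid answer; when a host is marked as failed, its clients are simply mapped onto the remaining ones.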

Failover clusters are probably the most common type of cluster. They consist of servers that can handle and trade workloads for stateful applications (those that have long-running in-memory state or frequently updated data), such as e-mail, database, file, print, and virtualization services.

A Windows failover cluster’s purpose is to help maintain client access to applications and server resources even in the event of some sort of outage (natural disaster, software failure, server failure, etc.). The general idea of availability behind a failover cluster implementation is that client machines and applications do not need to worry about which server in the cluster is actively running a given resource; the name and IP address of whatever is running within the failover cluster is virtualized. This means the application or client connects to a single name or IP address, but behind the scenes, the resource that is being accessed can be running on any server that is part of the cluster.

Comparing NLB with failover clustering, we could say that clustering is the use of multiple computers to provide a single service, while load balancing is a technique for using multiple computers in a cluster. In other words, a cluster is an object and load balancing is a method.

So, let’s try to compare these two cluster types in Windows Server 2008:

| Feature | NLB | Failover Clustering |
| --- | --- | --- |
| Primary usage | Scaling-out workloads, provide some fault tolerance for stateless applications | Increased reliability of software, services and network connections |
| Common workloads | IIS, ISA Server | E-mail, databases, file services, print services, virtualization |
| Failover transparency | Possibly some interruption for clients | In most cases, completely seamless for clients |
| Supported nodes | 32 | 16 |

Cluster Terminology

Failover Cluster

A cluster is a group of independent computers, also known as nodes, that are linked together to provide highly available resources for a network. Each node that is a member of the cluster has both its own individual disk storage and access to a common disk subsystem. When one node in the cluster fails, the remaining node or nodes assume responsibility for the resources that the failed node was running. This allows the users to continue to access those resources while the failed node is out of operation.

Node

A server in the failover cluster is known as a node; nodes are simply the servers that are members of the cluster.

Geocluster

Clusters can be deployed in a server farm in a single physical facility, or in different, geographically separated facilities for added resiliency. The latter type of cluster is often referred to as a geocluster. Geoclusters became very popular as a tool to implement business continuance because they reduce the time it takes to bring an application online after the servers in the primary site become unavailable. In other words, they improve the recovery time objective (RTO).

Cluster networks

The nodes in a cluster communicate over a public and a private network. The public network is used to receive client requests, while the private network is mainly used for monitoring. The nodes monitor the health of each other by exchanging heartbeats on the private network and if this network becomes unavailable, they can use the public network. There can be more than one private network connection for redundancy but most deployments simply use a different VLAN for the private network connection. Alternatively, it is also possible to use a single LAN interface for both public and private connectivity, but this is not recommended for redundancy reasons.

Logical host

The term Logical Host or Cluster Logical Host is used to describe the network address which is used to access services provided by the cluster. This logical host identity is not tied to a single cluster node as it is actually a network address/hostname that is linked with the service(s) provided by the cluster. If a cluster node with a running database goes down, the database will be restarted on another cluster node, and the network address that the users use to access it will point them to the new node so that users can access the database again.

Failover

Failover occurs when a clustered resource fails on one server and another server takes over the management of the resource.

Failback

When the server which dropped out of the cluster returns to service and rejoins the cluster, the services or applications which previously failed over to another node can now return to the server on which they originally ran. This is called failback.

Quorum

The quorum for a cluster can be seen as just the number of nodes that must be online for that cluster to continue running. But there is more to it; for example if all the communication between the nodes in the cluster is lost, both nodes will try to bring the same group online, which results in an active-active scenario. Incoming requests go to both nodes, which then try to write to the shared disk, thus causing data corruption. This is commonly referred to as the split-brain problem.

The mechanism that protects against this problem is the quorum and only one node in the cluster owns the quorum at any given time. The key concept is that when all communication is lost, the node that owns the quorum is the one that can bring resources online, while if partial communication still exists, the node that owns the quorum is the one that can initiate the move of an application group.

Quorum implementation in Windows Server

When a failover cluster is brought online (assuming one node at a time), the first disk brought online is the one associated with the quorum model deployed. To do this, the failover cluster executes a disk arbitration algorithm to take ownership of that disk on the first node, initially marking it as offline and then going through a few checks. When the cluster is satisfied that there are no problems with the quorum, it is brought online. The same thing happens with the other disks. After all the disks come online, the Cluster Disk Driver sends periodic reservations every 3 seconds to keep ownership of the disk.

If for some reason the cluster loses communication over all of its networks, the quorum arbitration process begins. The outcome is straightforward: the node that currently owns the reservation on the quorum is the defending node and the other nodes become challengers. When a challenger detects that it cannot communicate, it issues a request to break any existing reservations it owns, via a bus-wide SCSI reset in Windows Server 2003 and a persistent reservation in Windows Server 2008. Seven seconds after this reset happens, the challenger attempts to gain control of the quorum, and then a few things can happen:

- If the node that already owns the quorum is up and running, it still has the reservation of the quorum disk thus the challenger cannot take ownership and it shuts down the Cluster Service;

- If the node that owns the quorum fails and gives up its reservation, then the challenger can take ownership after 10 seconds elapse. The challenger can reserve the quorum, bring it online, and subsequently take ownership of other resources in the cluster;

- If no node of the cluster can gain ownership of the quorum, the Cluster Service is stopped on all nodes.
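The three outcomes above can be summarized in a small decision function. This is only a sketch of the logic as described in this post: the function name, the `disk_reachable` flag, and the assumption that the first challenger in the list is the first to attempt the reservation are all hypothetical, and the timing (3, 7, and 10 seconds) is deliberately left out.

```python
from typing import Optional


def arbitrate(
    defender: str,
    defender_alive: bool,
    challengers: list[str],
    disk_reachable: bool = True,
) -> tuple[Optional[str], list[str]]:
    """Return (new quorum owner, nodes on which the Cluster Service stops)."""
    nodes = [defender, *challengers]
    if not disk_reachable:
        # No node can gain ownership of the quorum: everything stops.
        return None, nodes
    if defender_alive:
        # The defender keeps renewing its reservation; challengers give up.
        return defender, list(challengers)
    if challengers:
        # The defender gave up its reservation; the first challenger wins.
        winner, *losers = challengers
        return winner, [defender, *losers]
    return None, nodes
```

For example, `arbitrate("n1", False, ["n2", "n3"])` models a failed quorum owner: `n2` takes the quorum and can then bring the other resources online.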

Applications

Running an application on a server that has clustering software installed does not mean that the application will benefit from the clustering. Unless an application is cluster-aware, an application process crashing does not necessarily cause a failover to the process running on the redundant machine. For this to happen you need an application that is cluster-aware, and each vendor of cluster software provides immediate support for certain applications.

Resources

Resources are the applications, services, or other elements under the control of the Cluster Service. A clustered application has individual resources, such as an IP address, a physical disk, and a network name.

Resource Monitor

Resource monitors check their assigned resources and notify the Cluster Service if there is any change in the resource state.

Resource group

One key concept with clusters is the group. This term refers to a set of dependent resources that are grouped together. Individual resources are contained in a cluster resource group, which is similar to a folder on a hard drive that contains files. The group is a unit of failover; in other words, it is the bundling of all the resources that constitute an application, including its IP address, its name, the disks, and so on. A resource group is the smallest unit of failover; individual resources cannot fail over on their own. That is, all elements that belong to a single resource group have to exist on a single node.

One example of the grouping of resources could be a “shared folder” application, its IP address, the disk that this application uses, and the network name. If any one of these resources is not available, for example if the disk is not reachable by this server, the group fails over to the redundant machine. The failover of a group from one machine to another one can be automatic, when a key resource in the group fails, or manual.
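The "shared folder" example can be sketched as a group whose resources always move together. This is an illustrative model only; the `ResourceGroup` class and its methods are hypothetical, and the sketch assumes every resource comes online successfully on the new node.

```python
class ResourceGroup:
    """A set of resources that is owned by exactly one node at a time."""

    def __init__(self, name: str, resources: list[str], owner: str):
        self.name = name
        self.resources = dict.fromkeys(resources, True)  # resource -> available?
        self.owner = owner

    def move_to(self, node: str) -> None:
        """Manual or automatic move: every resource changes owner together."""
        self.owner = node
        for resource in self.resources:
            # Assumption: each resource comes online cleanly on the new node.
            self.resources[resource] = True

    def report_failure(self, resource: str, standby: str) -> None:
        """A key resource failed, so the whole group fails over to the standby."""
        if resource not in self.resources:
            raise KeyError(resource)
        self.resources[resource] = False
        self.move_to(standby)
```

Calling `report_failure("physical disk", "node-b")` on a group owned by `node-a` moves the IP address, network name, disk, and file share to `node-b` in one step, which is what "the group is the unit of failover" means in practice.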

Dependencies

Some resources need other resources in order to run successfully; those other resources are their dependencies within a group. If a resource depends on another, it cannot start until the resource it depends on is online. For example, a file share needs a physical disk to hold the data that will be accessed through the share. Similarly, in a clustered SQL Server implementation, SQL Server Agent depends on SQL Server being started before it can come online. If SQL Server cannot be started, SQL Server Agent will not be brought online either.

These relationships are known as resource dependencies. When one resource is defined as a dependency for another resource, both resources must be placed in the same group. If a number of resources are ultimately dependent on one resource (for example, one physical disk resource), all of those resources must belong to the same group. Dependencies are used to define how different resources relate to one another. These interdependencies control the sequence in which the Cluster Service brings resources online and takes them offline.
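Deriving the online and offline sequence from dependencies is a topological sort. The sketch below uses Python's standard `graphlib` module (Python 3.9+). The SQL Server Agent dependency comes from the example above; the other entries in `DEPENDENCIES` are assumed for illustration and are not taken from a real cluster configuration.

```python
from graphlib import TopologicalSorter

# Each resource maps to the set of resources it depends on.
DEPENDENCIES = {
    "Network Name": {"IP Address"},                  # assumed for illustration
    "SQL Server": {"Physical Disk", "Network Name"},  # assumed for illustration
    "SQL Server Agent": {"SQL Server"},              # from the example in the text
}


def online_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Resources in an order where every dependency comes online first."""
    return list(TopologicalSorter(dependencies).static_order())


def offline_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Taking resources offline is the reverse: dependents go first."""
    return list(reversed(online_order(dependencies)))
```

`TopologicalSorter` raises `graphlib.CycleError` if two resources depend on each other, which matches the intuition that a circular dependency can never be brought online.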

Resource states

Resources can exist in one of five states:

- Offline: the resource is not available for use by any other resource or client;

- Offline Pending: this is a transitional state while the resource is being taken offline;

- Online: the resource is available;

- Online Pending: this is a transitional state while the resource is being brought online;

- Failed: there is a problem with the resource that the Cluster Service cannot resolve.

One can specify the amount of time that Cluster Service allows for specific resources to go online or offline. If the resource cannot be brought online or offline within this time, the resource is placed in the failed state.
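The five states and the pending timeout can be modelled as a small enumeration. This is a sketch under stated assumptions: `bring_online`, its parameters, and the timeout values used with it are hypothetical and do not correspond to actual Cluster Service settings.

```python
from enum import Enum


class State(Enum):
    OFFLINE = "Offline"
    OFFLINE_PENDING = "Offline Pending"
    ONLINE = "Online"
    ONLINE_PENDING = "Online Pending"
    FAILED = "Failed"


def bring_online(seconds_needed: float, pending_timeout: float) -> list[State]:
    """Return the states a resource passes through while coming online.

    The resource ends up Failed if it needs longer than the allowed time.
    """
    history = [State.OFFLINE, State.ONLINE_PENDING]
    if seconds_needed <= pending_timeout:
        history.append(State.ONLINE)
    else:
        history.append(State.FAILED)
    return history
```

Taking a resource offline would follow the mirror-image path through `OFFLINE_PENDING`, with the same rule that exceeding the allowed time ends in `FAILED`.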

Witness

A witness element, either a disk witness or a file share witness, is used only in some clusters, usually those with an even number of nodes. It acts as a tiebreaker when there are failures and the remaining nodes must determine whether enough nodes are currently running to work together as a cluster.
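The tiebreaker role is easiest to see by counting votes. The sketch below assumes a simple majority model in which each node has one vote and a configured witness adds one more; the function name and parameters are hypothetical.

```python
def has_quorum(
    total_nodes: int,
    nodes_online: int,
    witness_configured: bool = False,
    witness_online: bool = False,
) -> bool:
    """True if the votes that are present form a strict majority of all votes."""
    total_votes = total_nodes + (1 if witness_configured else 0)
    votes_present = nodes_online + (1 if witness_configured and witness_online else 0)
    return votes_present > total_votes // 2
```

With four nodes and no witness, a two-two split leaves neither half with a majority. Adding a witness makes five votes in total, so the half that can still reach the witness holds three and keeps running.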
