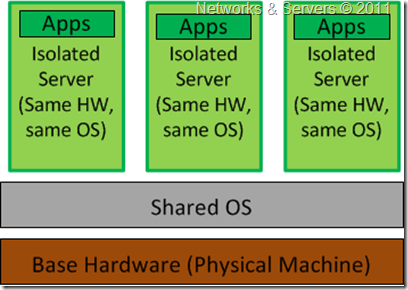

This is probably the most common and most easily explained kind of server virtualization. When IT departments struggled to get reliable results from machines running at full capacity, it made sense to take advantage of cheap hardware and assign one physical server to every IT function. A typical enterprise would have one box for SQL, one for the Apache server and another physical box for the Exchange server. Now, each of those machines could be using only 5% of its full processing potential. This is where hardware emulators come into play in an effort to consolidate those servers.

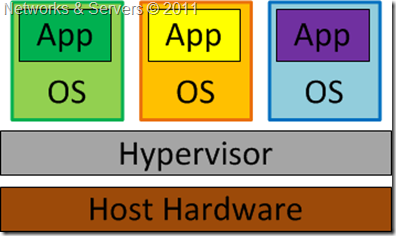

A hardware emulator presents a simulated hardware interface to guest operating systems. In hardware emulation, the virtualization software (usually referred to as a hypervisor) actually creates an artificial hardware device with everything it needs to run an operating system and presents an emulated hardware environment that guest operating systems operate upon. This emulated hardware environment is typically referred to as a Virtual Machine Monitor or VMM.

Hardware emulation supports actual guest operating systems; the applications running in each guest operating system are running in truly isolated operating environments. This way, we can have multiple servers running on a single box, each completely independent of the other. The VMM provides the guest OS with a complete emulation of the underlying hardware and for this reason, this kind of virtualization is also referred to as Full Virtualization.

Thus, full virtualization provides a complete simulation of the underlying hardware and is a technique used to provide support for unmodified guest operating systems. This means that all software, operating systems and applications, which can run natively on the hardware can also be run in the virtual machine.

The term “unmodified” refers to operating system kernels which have not been altered to run on a hypervisor and therefore still execute privileged operations as though running in ring 0 of the CPU. Full virtualization uses the hypervisor to coordinate the CPU of the server and the host machine's system resources in order to manage the running of guest operating systems without any modification. In this scenario, the hypervisor provides CPU emulation to handle and modify privileged and protected CPU operations made by unmodified guest operating system kernels.
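To make the idea of handling privileged operations more concrete, here is a minimal sketch of a trap-and-emulate loop. It is a toy model, not how any real hypervisor is written: the instruction names (`ADD`, `OUT`, `HLT`, `LOAD_CR3`) and the `VirtualCPU` and `Hypervisor` classes are hypothetical and exist only for illustration. The point it tries to show is that privileged operations change per-guest virtual state instead of touching the real machine.

```python
from dataclasses import dataclass, field

# Hypothetical instruction names, chosen only for this illustration.
PRIVILEGED = {"OUT", "HLT", "LOAD_CR3"}


@dataclass
class VirtualCPU:
    """Per-guest virtual state that the hypervisor keeps on the guest's behalf."""
    registers: dict = field(default_factory=lambda: {"ACC": 0, "CR3": 0})
    halted: bool = False
    io_log: list = field(default_factory=list)


class Hypervisor:
    """Toy trap-and-emulate loop: privileged ops only touch virtual state."""

    def run(self, vcpu, program):
        for opcode, operand in program:
            if vcpu.halted:
                break
            if opcode in PRIVILEGED:
                # The guest believes it is in ring 0; the hypervisor steps in.
                self.emulate(vcpu, opcode, operand)
            else:
                self.execute_directly(vcpu, opcode, operand)

    def execute_directly(self, vcpu, opcode, operand):
        # Unprivileged work needs no intervention from the hypervisor.
        if opcode == "ADD":
            vcpu.registers["ACC"] += operand
        else:
            raise ValueError(f"unknown opcode {opcode}")

    def emulate(self, vcpu, opcode, operand):
        if opcode == "LOAD_CR3":
            vcpu.registers["CR3"] = operand  # virtual register, not the real one
        elif opcode == "OUT":
            vcpu.io_log.append(operand)      # routed to a virtual device
        elif opcode == "HLT":
            vcpu.halted = True               # halts this guest, not the host


guest_program = [
    ("ADD", 2),
    ("LOAD_CR3", 0x1000),
    ("ADD", 3),
    ("OUT", "hello"),
    ("HLT", None),
    ("ADD", 100),  # never reached: the guest has halted
]
vcpu = VirtualCPU()
Hypervisor().run(vcpu, guest_program)
print(vcpu.registers, vcpu.io_log, vcpu.halted)
```

Notice that the guest program is not changed in any way; only the hypervisor decides what a privileged operation really does.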

The guest operating system makes system calls to the emulated hardware. These calls, which would actually interact with underlying hardware, are intercepted by the virtualization hypervisor which maps them onto the real underlying hardware. The hypervisor keeps each virtual server completely independent of the other virtual servers running on the same physical machine. Each guest server has its own operating system, so one guest server may be running Linux while another is running Windows.

The hypervisor also monitors and controls the physical server resources, allocating what is needed to each operating system and making sure that the guest operating systems (the virtual machines) cannot disrupt each other. With full virtualization, the guest OS is not aware it is being virtualized and thus requires no modification.
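The bookkeeping side of this can be sketched in a few lines. The example below is only an illustration under simplifying assumptions: the `HostResources` class and its method names are hypothetical, resources are limited to memory and CPU cores, and there is no overcommitment (real hypervisors often allow it). It shows the hypervisor refusing a request that would take resources away from guests that are already running.

```python
class ResourceError(Exception):
    """Raised when a guest asks for more than the host can still provide."""


class HostResources:
    """Hypothetical bookkeeping for a physical server (no overcommitment)."""

    def __init__(self, total_memory_mb, total_cpus):
        self.total_memory_mb = total_memory_mb
        self.total_cpus = total_cpus
        self.allocations = {}  # VM name -> (memory_mb, cpus)

    def free_memory_mb(self):
        used = sum(mem for mem, _ in self.allocations.values())
        return self.total_memory_mb - used

    def free_cpus(self):
        used = sum(cpus for _, cpus in self.allocations.values())
        return self.total_cpus - used

    def allocate(self, vm_name, memory_mb, cpus):
        if vm_name in self.allocations:
            raise ResourceError(f"{vm_name} already has an allocation")
        if memory_mb > self.free_memory_mb() or cpus > self.free_cpus():
            # Refuse instead of shrinking what other guests were promised.
            raise ResourceError(f"not enough free resources for {vm_name}")
        self.allocations[vm_name] = (memory_mb, cpus)

    def release(self, vm_name):
        self.allocations.pop(vm_name, None)


host = HostResources(total_memory_mb=16384, total_cpus=8)
host.allocate("linux-guest", memory_mb=4096, cpus=2)
host.allocate("windows-guest", memory_mb=8192, cpus=4)

try:
    host.allocate("greedy-guest", memory_mb=8192, cpus=1)
    rejected = False
except ResourceError:
    rejected = True

print(host.free_memory_mb(), host.free_cpus(), rejected)
```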

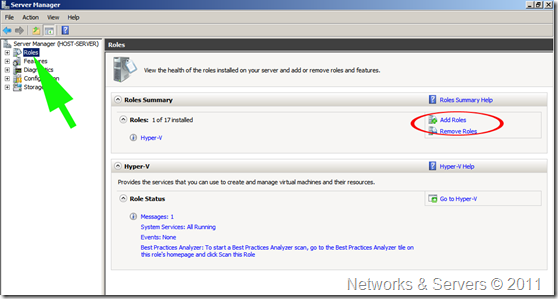

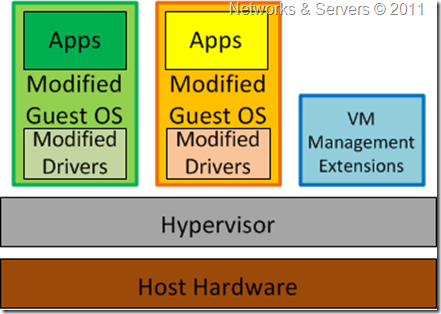

Type 1 Hypervisor

Also known as Native or Bare-Metal Virtualization, this is a technique where the abstraction layer sits directly on the hardware and all the other blocks reside on top of it. The Type 1 hypervisor runs directly on the hardware of the host system in ring 0. The task of this hypervisor is to handle resource and memory allocation for the virtual machines in addition to providing interfaces for higher level administration and monitoring tools. The operating systems run on another level above the hypervisor.

Clearly, with the hypervisor occupying ring 0 of the CPU, the kernels for any guest operating systems running on the system must run in less privileged CPU rings. The Type 1 Hypervisor contains functionalities like CPU scheduling and Memory Management and, even though there is no Host OS, usually one of the Virtual Machines has certain privileged status (Control/Admin/Parent VM).

Unfortunately, most operating system kernels are written explicitly to run in ring 0 for the simple reason that they need to perform tasks that are only available in that ring, such as the ability to execute privileged CPU instructions and directly manipulate memory.

Also, depending on the architecture, the hypervisor may either contain the drivers for the hardware resources (referred to as a Monolithic Hypervisor) or the drivers may be retained at the Guest OS level itself (in which case it can be called a Microkernelized Hypervisor).
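The difference between the two designs comes down to where an I/O request ends up. The sketch below is a rough illustration only: all class names are hypothetical, and it assumes that in the microkernelized case the drivers live in the privileged parent VM mentioned earlier, with the hypervisor doing nothing more than forwarding the request.

```python
class Driver:
    """Stand-in for a device driver; the device names are made up."""

    def __init__(self, device):
        self.device = device

    def handle(self, request):
        return f"{self.device} driver: {request}"


class MonolithicHypervisor:
    """Drivers live inside the hypervisor itself."""

    def __init__(self, drivers):
        self.drivers = {d.device: d for d in drivers}

    def io_request(self, device, request):
        driver = self.drivers.get(device)
        if driver is None:
            # No driver in the hypervisor means the device cannot be used.
            raise LookupError(f"hypervisor has no driver for {device}")
        return driver.handle(request)


class ParentVM:
    """Privileged VM that owns the drivers (assumed placement)."""

    def __init__(self, drivers):
        self.drivers = {d.device: d for d in drivers}

    def handle_io(self, device, request):
        return self.drivers[device].handle(request)


class MicrokernelizedHypervisor:
    """Hypervisor stays small and forwards I/O to the parent VM."""

    def __init__(self, parent_vm):
        self.parent_vm = parent_vm

    def io_request(self, device, request):
        return self.parent_vm.handle_io(device, request)


mono = MonolithicHypervisor([Driver("disk")])
micro = MicrokernelizedHypervisor(ParentVM([Driver("disk")]))

print(mono.io_request("disk", "read"))
print(micro.io_request("disk", "read"))
```

From the guest's point of view both calls look the same; what changes is which component has to ship and maintain the driver.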

Since it has low level direct access to the hardware, a Type 1 hypervisor is more efficient than a hosted application and delivers greater performance because it uses fewer resources (no separate CPU cycles or memory footprint as in the case of a full-fledged Host OS).

The disadvantage of this model is that there is dependency on the hypervisor for the drivers (at least in case of the Monolithic Hypervisor). Besides, most implementations of the bare-metal approach require specific virtualization support at the hardware level (“Hardware-assisted”, to be discussed in a future post).

Examples of this architecture are Microsoft Hyper-V and VMware ESX Server.

Note: Microsoft Hyper-V (released in June 2008) exemplifies a Type 1 product that can be mistaken for a Type 2. Both the free stand-alone version and the version that is part of the commercial Windows Server 2008 product use a virtualized Windows Server 2008 parent partition to manage the Type 1 Hyper-V hypervisor. In both cases the Hyper-V hypervisor loads prior to the management operating system, and any virtual environments created run directly on the hypervisor, not via the management operating system.

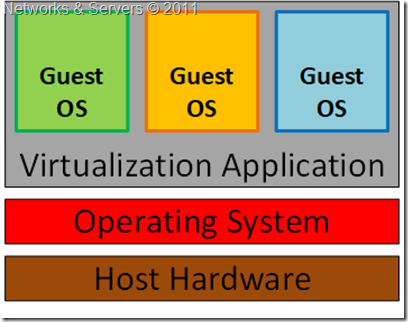

Type 2 Hypervisor

Guest OS Virtualization is perhaps the easiest concept to understand. In this scenario the physical host computer system runs a standard unmodified operating system such as Windows, Linux, Unix or Mac OS X, and the virtualization layer runs on top of that OS, being in effect a hosted application. In this architecture, the VMM provides each virtual machine with all the services of the physical system, including a virtual BIOS, virtual devices and virtual memory. This has the effect of making the guest system think it is running directly on the system hardware, rather than in a virtual machine within an application.

The OS itself provides the abstraction layer (known as Type 2 Hypervisor), such that it allows other OSes to reside within, thus creating virtual machines. This architecture can then be called Hosted Virtualization (since an OS is ‘hosting’ it), as depicted below.

The virtualization layer in the above figure contains the software needed for hosting and managing the virtual machines but its exact functionality can vary based on the different architectures from different vendors. In this approach, the Guest OS relies on the underlying Host OS for access to the hardware resources.

The multiple layers of abstraction between the guest operating systems and the underlying host hardware are not conducive to high levels of virtual machine performance. This technique does, however, have the advantage that no changes are necessary to either host or guest operating systems and no special CPU hardware virtualization support is required.

Examples of a Type 2 Hypervisor are Oracle's VirtualBox, VMware Server, VMware Workstation and Microsoft Virtual PC.

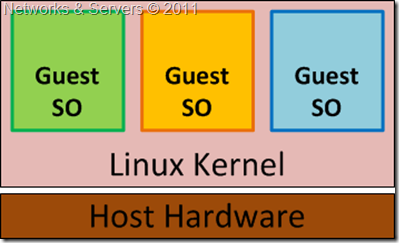

Embedded Hypervisor

In

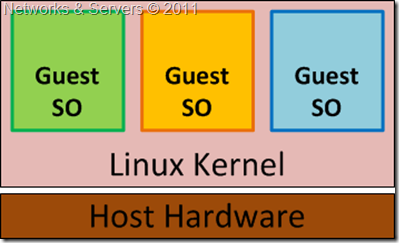

Kernel Level Virtualization the host operating system runs on a specially modified kernel which contains extensions designed to manage and control multiple virtual machines each containing a guest operating system.

The virtualization layer is embedded into an operating system kernel and each guest runs its own kernel, although restrictions apply in that the guest operating systems must have been compiled for the same hardware as the kernel in which they are running.

The real benefit with this approach is that the hypervisor code is dramatically leaner than either Type 1 or Type 2. With the hypervisor embedded into the Linux kernel, the guest operating systems benefit from excellent disk and network I/O performance.

Examples of kernel level virtualization technologies include User Mode Linux (UML) and Kernel-based Virtual Machine (KVM).

Full Virtualization Advantages

- This approach to virtualization means that applications run in a truly isolated guest OS, with one or more of these guest OSs running simultaneously on the same hardware. Not only does this method support multiple OSes, it can support dissimilar OSes, differing in minor ways (for example, version and patch level) or in major ways (for example, completely different OSes like Windows and Linux);

- The guest OS is not aware it is being virtualized and requires no modification. Full virtualization is the only option that requires no hardware assist or operating system assist to virtualize sensitive and privileged instructions. The hypervisor translates all operating system instructions on the fly and caches the results for future use, while user level instructions run unmodified at native speed;

- The VMM provides a standardized hardware environment that the guest OS resides on and interacts with. Because the guest OS and the VMM form a consistent package, that package can be migrated from one machine to another, even though the physical machines the packages run upon may differ;

- Full virtualization offers the best isolation and security for virtual machines, and simplifies migration and portability as the same guest OS instance can run virtualized or on native hardware.
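The “translate on the fly and cache the results” behaviour from the second point above can be sketched as follows. This is a simplified illustration and not a real binary translator: the `TranslatingHypervisor` class, the `safe_` prefix and the instruction strings are all hypothetical. It shows only the caching idea, where a privileged instruction is translated once and reused afterwards, while user level instructions pass through untouched.

```python
class TranslatingHypervisor:
    """Toy model of translating privileged instructions with a cache."""

    def __init__(self):
        self.cache = {}        # original instruction -> translated form
        self.translations = 0  # how many times the slow path was taken

    def translate(self, instruction):
        # Slow path: stand-in for rewriting a privileged instruction
        # into a sequence that is safe to run outside ring 0.
        self.translations += 1
        return f"safe_{instruction}"

    def run(self, instruction, privileged):
        if not privileged:
            # User level instructions run unmodified.
            return instruction
        if instruction not in self.cache:
            self.cache[instruction] = self.translate(instruction)
        return self.cache[instruction]


hv = TranslatingHypervisor()
first = hv.run("cli", privileged=True)    # translated and cached
second = hv.run("cli", privileged=True)   # served from the cache
user = hv.run("add", privileged=False)    # passed through as-is

print(first, second, user, hv.translations)
```

The translation cost is paid once per distinct privileged instruction, which is why repeated kernel code paths get cheaper after their first execution.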

Full Virtualization Limitations

- The virtualization software hurts performance, which is to say that applications often run somewhat slower on virtualized systems than they would on unvirtualized systems. The hypervisor itself consumes processing power, which means that part of the computing power of a physical server and related resources has to be reserved for the hypervisor program. While the VMM appears to solve the entire problem with regard to virtualized machines, it does bring in some level of performance degradation, caused by the extra processing (in terms of the ‘instruction translation’) that the hypervisor has to do. This can have a negative impact on overall server performance and slow down the applications;

- The hypervisor must contain the interfaces to the resources of the machine; these interfaces are referred to as device drivers. Because hardware emulation uses software to trick the guest OS into communicating with simulated non-existent hardware, this approach has created some driver compatibility problems. The issue is that the hypervisor contains the device drivers and it might be difficult for new device drivers to be installed by users (unlike on your typical PC). Consequently, if a machine has hardware resources the hypervisor has no driver for, the virtualization software can’t be run on that machine. This can cause problems, especially for organizations that want to take advantage of new hardware developments.