System

A system is composed of a collection of interacting components. A component may itself be a system, or it may be a single, indivisible component. Components result from decomposing a system, chiefly to partition complex systems for technical reasons or, very often, for organizational or business reasons. Decomposition of systems into components is a recursive exercise. Components are typically delineated by the careful specification of their inputs and outputs. A component that is not decomposed further is called an atomic component.
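The recursive nature of decomposition can be illustrated with a small sketch. The `Component` class, its fields, and the example system below are hypothetical names chosen for illustration only; they show one possible way to model a component tree whose leaves are atomic components.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Component:
    """A component with specified inputs and outputs; it may contain sub-components."""
    name: str
    inputs: List[str] = field(default_factory=list)
    outputs: List[str] = field(default_factory=list)
    children: List["Component"] = field(default_factory=list)

    def is_atomic(self) -> bool:
        # A component that is not decomposed further is atomic.
        return not self.children

    def atomic_components(self) -> List["Component"]:
        # Walk the decomposition recursively and collect the leaves.
        if self.is_atomic():
            return [self]
        leaves: List["Component"] = []
        for child in self.children:
            leaves.extend(child.atomic_components())
        return leaves


# Hypothetical example: a system whose storage component is itself a system.
storage = Component("storage", ["write request"], ["ack"], [
    Component("disk", ["block"], ["status"]),
    Component("controller", ["command"], ["block"]),
])
system = Component("order system", ["order"], ["confirmation"], [
    Component("web server", ["http request"], ["http response"]),
    storage,
])

atomic_names = [c.name for c in system.atomic_components()]
print(atomic_names)
```

Here `storage` is both a component of the order system and a system in its own right, while the web server, disk, and controller are atomic.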

Service

A system provides one or more services to its consumers. A service is the output of a system that meets the specification for which the system was devised, or which agrees with what system users have perceived the correct values to be.

Failure

A failure in a system occurs when the consumer (human or non-human) of a service is affected by the fact that the system has not delivered the expected service. Failures are incorrect results with respect to a specification or unexpected behavior perceived by the consumer or user of a service. The cause of a failure is said to be a fault.

Mean time to failure (MTTF)

Hardware reliability can be predicted by statistically analyzing historical data. The longer a component operates, the more likely it is to fail due to aging. The mean time to failure of a component is just that: a statistical forecast of the average time a component operates before it fails, under the modeling assumption that the failed component is not repaired. The greater the MTTF of a component, the less likely it is to fail within a given period. If the MTTF of a component is known, you can use it to establish preventive maintenance procedures. The MTTF of an overall system can be improved by carefully selecting your hardware and software.

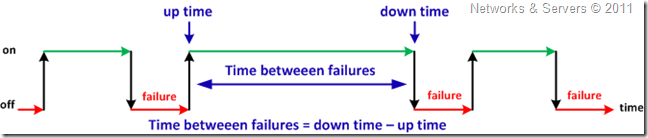
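As a minimal sketch of how an MTTF figure might be estimated from historical data, the example below averages observed lifetimes of units that were run until they failed and were not repaired. The function name and the sample lifetimes are hypothetical, and the sketch ignores units that are still running (censored data), which a real reliability analysis would have to account for.

```python
from statistics import mean


def mean_time_to_failure(hours_to_failure):
    """Average operating time until failure for units that are not repaired."""
    if not hours_to_failure:
        raise ValueError("need at least one observed failure")
    return mean(hours_to_failure)


# Hypothetical observed lifetimes (in hours) of five identical disk drives.
observed_hours = [41000, 52000, 38500, 60500, 48000]
mttf_hours = mean_time_to_failure(observed_hours)
print(mttf_hours)
```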

Mean time between failures (MTBF)

Mean time between failures (MTBF) is the predicted elapsed time between inherent failures of a system during operation and can be calculated as the arithmetic mean (average) time between failures of that system. The MTBF is typically part of a model that assumes the failed system is immediately repaired (zero elapsed time), as a part of a renewal process.
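Under the zero-repair-time assumption described above, the arithmetic mean reduces to total operating time divided by the number of failures. The following sketch shows that calculation; the function name and the sample figures are illustrative assumptions, not values from any particular system.

```python
def mean_time_between_failures(total_operating_hours, failure_count):
    """MTBF as total operating time divided by the number of failures."""
    if failure_count <= 0:
        raise ValueError("MTBF is undefined without at least one failure")
    return total_operating_hours / failure_count


# Hypothetical: one year of operation (8760 hours) with 4 failures,
# each assumed to be repaired instantly.
mtbf_hours = mean_time_between_failures(8760, 4)
print(mtbf_hours)
```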

Fault

Faults are identified or detected in some manner either by the system or by its users. Faults are active when they produce an error but they may also be latent. A latent fault is one that has not yet been detected. Since faults, when activated, cause errors, it is important to detect not only active faults, but also the latent faults. Finding and removing these faults before they become active leads to less downtime and higher availability.

Error

An error is a discrepancy between the computed, measured, or observed value or condition and the correct or specified value or condition. Errors are often the result of exceptional conditions or unexpected interference. If an error occurs, then a failure of some form has occurred. Similarly, if a failure has occurred, then an error of some form has occurred. The difference between an error and a failure is very subtle, so it’s not unusual to treat the terms synonymously.

Timely Error Detection

If a component in your architecture fails, then fast detection is essential to recover from the unexpected failure. Monitoring the health of your environment requires reliable software to view it quickly and the ability to notify the system administrator of a problem. While you may be able to recover quickly from an outage, if it takes an additional 90 minutes to discover the problem, then you may not meet your service level agreement (SLA).
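The effect of detection delay on an SLA can be made concrete with some simple arithmetic. The sketch below assumes a hypothetical 99.9% availability target measured over a 30-day period and a 10-minute recovery time; the function names and all of these numbers are illustrative only.

```python
def allowed_downtime_minutes(sla_percent, period_days=30):
    """Downtime budget implied by an availability target over a period."""
    period_minutes = period_days * 24 * 60
    return period_minutes * (1 - sla_percent / 100)


def outage_minutes(detection_minutes, recovery_minutes):
    # The outage lasts from the failure until service is restored,
    # so the time spent noticing the problem counts against the budget.
    return detection_minutes + recovery_minutes


budget = allowed_downtime_minutes(99.9)  # roughly 43.2 minutes per 30 days
slow = outage_minutes(detection_minutes=90, recovery_minutes=10)
fast = outage_minutes(detection_minutes=2, recovery_minutes=10)

print(f"budget: {budget:.1f} min, slow detection: {slow} min, fast detection: {fast} min")
```

With these assumed figures, a single outage that takes 90 minutes to detect exceeds the whole monthly budget even though recovery itself takes only 10 minutes.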

Availability

Availability is the degree to which an application, service, or function is accessible on demand. It is measured by the perception of the application's end users, who experience frustration when their data is unavailable or the computing system is not performing within expectations, and who neither understand nor care to differentiate between the complex components of the overall solution.

Availability refers to the ability of the user community to access the system, whether to submit new work, update or alter existing work, or collect the results of previous work. If a user cannot access the system, it is said to be unavailable. Generally, the term downtime is used to refer to periods when a system is unavailable.

The dictionary defines the word available as follows:

- Present and ready for use; at hand, accessible;

- Capable of being gotten, obtainable;

- Qualified and willing to be of service or assistance.

When applied to computer systems, the word's meaning is a combination of all these factors. Thus, access to an application should be present and ready for use, capable of being accessed, and qualified and willing to be of service. In other words, an application should be available easily for use at any time and should perform at a level that is both acceptable and useful.

From a mathematical point of view, and in order to quantify it, availability can also be defined as the probability that an item (or network, etc.) is operational and ready to go at any point in time, or equivalently as the expected fraction of time it is operational. Annual uptime is the amount of time (in days, hours, minutes, etc.) the item is operational in a year.

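This fraction is commonly written as:

$$\text{Availability} = \frac{\text{MTBF}}{\text{MTBF} + \text{MTTR}}$$

In this formula, MTTR stands for Mean Time To Recovery (or to Repair).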
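As a rough worked example, the sketch below assumes the commonly used steady-state ratio MTBF / (MTBF + MTTR) and converts the result into annual uptime and downtime. The function names and the MTBF and MTTR values are hypothetical.

```python
HOURS_PER_YEAR = 8760  # 365 days; leap years are ignored in this sketch


def availability(mtbf_hours, mttr_hours):
    """Expected fraction of time the item is operational."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def annual_uptime_hours(avail, hours_per_year=HOURS_PER_YEAR):
    """Hours per year the item is expected to be operational."""
    return avail * hours_per_year


# Hypothetical item: fails on average every 999 hours, takes 1 hour to recover.
a = availability(mtbf_hours=999, mttr_hours=1)
uptime = annual_uptime_hours(a)
downtime = HOURS_PER_YEAR - uptime

print(f"availability: {a:.3%}, uptime: {uptime:.2f} h, downtime: {downtime:.2f} h")
```

Under these assumptions the item is available 99.9% of the time, which still leaves close to nine hours of downtime per year.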

Continuous Operation

Providing the ability for continuous access to your data is essential when very little or no downtime is acceptable to perform maintenance activities. Activities, such as moving a table to another location in the database or even adding CPUs to your hardware, should be transparent to the end user in a high availability architecture. Continuous operation is the ability of a system to provide nonstop, uninterrupted service to its users.

Continuous Availability

Continuous availability is a special subset of high availability that combines it with continuous operation. The system must not have outages and service delivery must be ongoing, without interruptions.

This goal is very hard to achieve, as computer components, be they hardware or software, are neither error-free nor maintenance-free; therefore a system that needs continuous availability has to be fault tolerant. In addition, the need to perform maintenance activities on a running system is another differentiator between continuous availability and other categories of high availability. Such installations have to cope with multiple versions of the same software running at the same time and being updated in phases.

Continuous availability concentrates on protection against failures, and discards user-visible recovery from failures as not acceptable. It is very rarely needed. In most situations, user sessions can be aborted and repeated without harm to the business or to people.

Fault tolerance

Fault tolerance is an attribute associated with a computing system that provides continuous service in the presence of faults. A fault-tolerant computer system or component is designed so that, in the event of component failure, a backup component or procedure can immediately take its place with no loss of service. The ability of a system to actively circumvent or otherwise compensate for activated faults varies among fault tolerance techniques.

Fault tolerance is often available for hardware components, but very seldom for software. It means that a failure does not become visible to the user, but is covered by internal protection mechanisms.

Fault tolerance can be provided with software, embedded in hardware, or provided by some combination of the two. It goes one step further than High Availability to provide the highest possible availability within a single data center.

In the case of hardware, fault tolerance is often achieved through the use of redundant elements in the system. In the case of software, fault tolerance techniques are generally realized through design diversity: multiple implementations of the software serve the same purpose. The implementations are often constructed by different development teams, designing to the same specification but with alternate implementations.
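One well-known way to exploit design diversity is to run the independent implementations side by side and take a majority vote on their results. The sketch below illustrates that idea only; the `vote` function and the three toy implementations are hypothetical, and it assumes the results are hashable values that can be compared for equality.

```python
from collections import Counter


def vote(implementations, value):
    """Run every independently built version and return the majority result."""
    results = []
    for impl in implementations:
        try:
            results.append(impl(value))
        except Exception:
            # A version that crashes simply loses its vote.
            continue
    if not results:
        raise RuntimeError("all versions failed")
    winner, count = Counter(results).most_common(1)[0]
    # Require a strict majority of all versions, not just of the survivors.
    if count <= len(implementations) // 2:
        raise RuntimeError("no majority among versions")
    return winner


# Three hypothetical versions written to the same specification ("square x").
def square_a(x):
    return x * x


def square_b(x):
    return x ** 2


def square_c(x):
    return x + x  # deliberately faulty: correct only for 0 and 2


result = vote([square_a, square_b, square_c], 3)
print(result)
```

The faulty third version is outvoted, so the fault it contains does not become visible to the caller.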

Fault Protection

Fault protection is the method used to ensure that component failures do not lead to service outages at all, providing continuous operation of a system in spite of those failures.

The application of best-known methods for design and development, the preference for simplicity over complexity, the refinement of user requirements, and the discipline to execute sound engineering practices are perhaps the best defense against faults being created in systems. Formal methods have been researched, but are still primarily left to academia. Few formal methods are routinely practiced in industry.

Only for a few components is fault protection available at reasonable rates. These are mostly hardware components, e.g., disk drives, power supplies, and I/O cards. This category is important nevertheless, as failures in those components happen often enough to make fault protection a standard solution. For other components, especially for software, fault protection is very expensive and is used only in designs for continuous availability.

Fault Removal

Fault removal during development comprises three steps: verification, diagnosis, and correction. Verification is the process of checking whether the system fulfills its intended function. If it does not, diagnosis and subsequent correction are required to remove the fault and the failure condition. Verification techniques vary but can generally be characterized as either static or dynamic.

Fault and Failure Forecasting

The ability to forecast future reliability is an instrumental part of the complete lifecycle of system availability. Understanding the operational environment, gathering field failure data, using reliability models, and analyzing and interpreting the results are all important to managing availability successfully. Fault and failure forecasting also requires an understanding of a related term, reliability growth.

Disaster Recovery

Disaster recovery (DR) is the ability to resume operations after a disaster, including destruction of an entire data center site and everything in it. In a typical DR scenario, significant time elapses before a data center can resume IT functions, and some amount of data typically needs to be re-entered to bring the system data back up to date.

Disaster Tolerance

Disaster tolerance (DT) is the art and science of preparing for disaster so that a business is able to continue operation after a disaster. The term is sometimes used incorrectly in the industry, particularly by vendors who can't really achieve it. Disaster tolerance is much more difficult to achieve than DR, as it involves designing systems that enable a business to continue in the face of a disaster, without the end users or customers noticing any adverse effects. The ideal DT solution would result in no downtime and no lost data, even during a disaster. Such solutions do exist, but they cost more than solutions that accept some amount of downtime or data loss in a disaster.

When availability is everything for a business, extremely high levels of disaster tolerance must allow the business to continue in the face of a disaster, without the end users or customers noticing any adverse consequences. Global business across time zones, 24×7×forever operations, e-commerce, and the risks associated with the modern world drive businesses to achieve a level of disaster tolerance capable of ensuring continuous survival and profitability.
