Planned outage/downtime

Planned outages include maintenance, offline backups and upgrades. These can often be scheduled outside periods when high availability is required.

Unplanned outage/downtime

While planned outages are a necessary evil, an unplanned outage can be a nightmare for a business. Depending on the business in question and the duration of the downtime, an unplanned outage can cause losses so severe that the business is forced to close. Whatever their nature, outages are something businesses rarely tolerate, so IT is under constant pressure to eliminate unplanned downtime entirely and to drastically reduce, if not eliminate, planned downtime.

Note that an application or computer system does not have to be completely down for an outage to occur. Its performance may degrade so badly that it becomes unusable; as far as the business or end user is concerned, the application is then down, even though it is technically available.

Unplanned shutdowns have various causes, which can be grouped into three main categories:

- Hardware Failures – Hardware failures can originate in main system components such as CPUs and memory; peripherals such as disks, disk controllers and network cards; auxiliary equipment such as power modules and fans; or network equipment such as switches, hubs and cables.

- Software Failures – The likelihood of a software failure depends mostly on the type of software used. One of the main causes of software failure is applying a patch: if a patch does not match the implementation, the application may start to behave strangely, bringing the application down and forcing the changes to be rolled back, if possible. Upgrades can also cause problems, typically performance regressions or misbehaving third-party products that depend on the upgraded components.

- Human Errors – An accidental action by any user can cause a major failure of the system. Deleting a necessary file, dropping data or a table, and updating the database with a wrong value are a few examples.

Redundancy

Redundancy is used to eliminate the need for human intervention when a component fails. There are two kinds of redundancy: passive redundancy and active redundancy.

- Passive redundancy is used to achieve high availability by including enough excess capacity in the design to accommodate a performance decline. The simplest example is a boat with two separate engines driving two separate propellers. Malfunction of single components is not considered to be a failure unless the resulting performance decline exceeds the specification limits for the entire system.

- Active redundancy is used in complex systems to achieve high availability with no performance decline. Multiple items of the same kind are incorporated into a design that includes a method to detect failure and automatically reconfigure the system to bypass failed items using a voting scheme. This is used with complex computing systems that are linked. Active redundancy may introduce more complex failure modes into a system, such as continuous system reconfiguration due to faulty voting logic.
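The voting step of active redundancy can be sketched as follows. This is a minimal illustration assuming three redundant units that each report a value (the classic triple modular redundancy arrangement); `majority_vote` and `faulty_units` are hypothetical names, not part of any specific product.

```python
from collections import Counter


def majority_vote(outputs):
    """Return the value reported by a strict majority of redundant units.

    Raises RuntimeError when no strict majority exists, so the system can
    react instead of silently using a possibly wrong value.
    """
    if not outputs:
        raise ValueError("no outputs to vote on")
    value, count = Counter(outputs).most_common(1)[0]
    if count * 2 <= len(outputs):
        raise RuntimeError(f"no majority among {outputs!r}")
    return value


def faulty_units(outputs, agreed):
    """Indices of units that disagree with the voted value (candidates to bypass)."""
    return [i for i, out in enumerate(outputs) if out != agreed]


readings = [42, 42, 17]  # hypothetical readings: unit 2 has failed
agreed = majority_vote(readings)
print(agreed, faulty_units(readings, agreed))  # 42 [2]
```

Raising an error when the units cannot agree is a deliberate choice: faulty voting logic is itself a failure mode, and refusing to guess is safer than picking an arbitrary value.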

Reliability

Reliability is a measurement of fault avoidance. It is the probability that a system is still working at time t+1 when it worked at time t. A similar definition is the probability that a system will be available over a time interval T.

Reliable hardware is one component of a high availability solution. Reliable software, including the database, Web servers and applications, is just as critical to implementing a highly available solution. A related characteristic is resilience: low-cost commodity hardware combined with good software can be used to build a very reliable system, because the resilience of good software allows processing to continue even when individual servers fail.

Reliability does not measure planned or unplanned downtime, and MTTR values do not influence it. Reliability is often expressed as the Mean Time Between Failures (MTBF).

Reliability features help to prevent and detect failures. Detection is very important, even if it is often ignored: the worst thing a system can do is continue after a failure and produce wrong results or corrupt data!
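Failure detection can be as simple as refusing to use data that fails an integrity check. The sketch below is an illustrative assumption, not a prescribed method: it stores a CRC-32 checksum (via Python's standard `zlib.crc32`) with each payload and stops with an error on a mismatch instead of passing corrupt data on. The `store`/`load` helpers and `CorruptDataError` are hypothetical.

```python
import zlib


class CorruptDataError(Exception):
    """Raised when stored data no longer matches its checksum."""


def store(payload: bytes) -> tuple:
    # Record a CRC-32 checksum alongside the data when it is written.
    return payload, zlib.crc32(payload)


def load(record: tuple) -> bytes:
    payload, expected = record
    # Detect the failure and stop, rather than continuing with bad data.
    if zlib.crc32(payload) != expected:
        raise CorruptDataError("checksum mismatch; refusing to use the data")
    return payload


record = store(b"balance=100")
damaged = (b"balance=900", record[1])  # simulate a corrupted byte
try:
    load(damaged)
except CorruptDataError as err:
    print("failure detected:", err)
```

CRC-32 catches accidental corruption, not deliberate tampering; a cryptographic hash would be needed for that.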

Similarly to what we did for availability, looking at reliability from a mathematical point of view, one can say that reliability is the probability of survival (no failure) for a stated length of time or, alternatively, the fraction of units that will not fail in that length of time. Annual reliability is the probability of survival for one year.
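To make the definition concrete, here is a small worked example. It assumes a constant failure rate (the exponential model), under which the probability of surviving a time t is R(t) = exp(-t / MTBF); real systems often deviate from this model, and the 100,000-hour MTBF is a hypothetical figure.

```python
import math

HOURS_PER_YEAR = 24 * 365  # 8760 h, ignoring leap years


def reliability(t_hours: float, mtbf_hours: float) -> float:
    """Probability of surviving t_hours without failure.

    Assumes a constant failure rate, so R(t) = exp(-t / MTBF).
    """
    return math.exp(-t_hours / mtbf_hours)


annual = reliability(HOURS_PER_YEAR, mtbf_hours=100_000)
print(round(annual, 4))  # 0.9161
# Read as a fleet fraction: about 916 of 1,000 such units survive a year.
print(round(annual * 1000))  # 916
```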

Availability vs. Reliability

- An airplane needs to be reliable. It doesn’t need to be available to fly 24 hours a day, but it must be reliable whenever its operator decides to put it into service.

- Reliability implies that the system performs its specified task correctly.

- Availability means that the system is ready for immediate use during its required service hours (9 to 5, 24x7, etc.).

- Today’s networks need to be available 24 hours a day, 7 days a week, 365 days a year; they need high availability.

Measured availability is the actual availability obtained by observing the engineered system in operation over time.
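As an illustration, measured availability can be computed from an outage log as uptime divided by total time. The sketch also shows, for comparison, the common steady-state estimate MTBF / (MTBF + MTTR), which predicts availability from design figures rather than measuring it. The outage timestamps are hypothetical.

```python
from datetime import datetime, timedelta


def measured_availability(period_start, period_end, outages):
    """Fraction of the period during which the system was up.

    outages: list of (down_at, up_at) datetime pairs inside the period.
    """
    total = period_end - period_start
    downtime = sum((up - down for down, up in outages), timedelta())
    return (total - downtime) / total  # timedelta / timedelta -> float


def predicted_availability(mtbf_hours, mttr_hours):
    # Steady-state estimate from reliability and repair figures.
    return mtbf_hours / (mtbf_hours + mttr_hours)


june = (datetime(2024, 6, 1), datetime(2024, 7, 1))  # 720 h
outages = [
    (datetime(2024, 6, 10, 2), datetime(2024, 6, 10, 4)),    # 2 h
    (datetime(2024, 6, 20, 13), datetime(2024, 6, 20, 14)),  # 1 h
]
print(round(measured_availability(*june, outages), 4))  # 0.9958
```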

Reliability growth

Reliability growth is the continued improvement of a system's reliability over time. It is generally measured as a successive increase in the intervals between failures.
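One rough way to check for reliability growth is to compute the intervals between successive failures and see whether they trend upward. The sketch below fits a least-squares slope to those intervals; it is a simple indicator under that assumption, not a formal reliability growth model (such as Crow-AMSAA), and the failure times are hypothetical.

```python
def inter_failure_intervals(failure_times):
    """Hours between consecutive failures (failure_times sorted, in hours)."""
    return [b - a for a, b in zip(failure_times, failure_times[1:])]


def interval_trend(intervals):
    """Least-squares slope of interval length against failure index.

    A positive slope suggests reliability growth (failures getting
    further apart); a negative slope suggests degradation.
    """
    n = len(intervals)
    if n < 2:
        raise ValueError("need at least two intervals")
    mean_x = (n - 1) / 2
    mean_y = sum(intervals) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(intervals))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


failures = [0, 100, 250, 450, 700]  # hypothetical failure times in hours
gaps = inter_failure_intervals(failures)
print(gaps, interval_trend(gaps))  # [100, 150, 200, 250] 50.0
```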

Recoverability

Because there may be many choices for recovering from a failure, it is important to determine what types of failures may occur in your high availability environment and how to recover from those failures in a timely manner that meets your business requirements. For example, if a critical table is accidentally deleted from the database, what action should you take to recover it? Does your architecture provide the ability to recover in the time specified in a service level agreement (SLA)?

Mean Time To Recovery (MTTR)

This is also a statistical measurement; it indicates the average time it takes to recover from a given type of failure. The lower the MTTR, the better. Self-healing systems may recover from a software error within seconds, whereas a disaster such as an earthquake or a fire might take your systems down permanently; having a disaster recovery plan is essential to handle this type of catastrophic event. Maintaining a pool of spare parts on site and taking frequent full backups will decrease the MTTR.
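As a small illustration of the statistic, MTTR can be computed from an incident log as the average time between failure and recovery. The incident data below is hypothetical.

```python
from datetime import datetime, timedelta


def mean_time_to_recovery(incidents):
    """Average of (recovered_at - failed_at) over all incidents."""
    if not incidents:
        raise ValueError("no incidents recorded")
    total = sum((rec - fail for fail, rec in incidents), timedelta())
    return total / len(incidents)


incidents = [  # (failed_at, recovered_at)
    (datetime(2024, 3, 1, 9, 0), datetime(2024, 3, 1, 9, 20)),     # 20 min
    (datetime(2024, 3, 8, 14, 0), datetime(2024, 3, 8, 14, 40)),   # 40 min
    (datetime(2024, 3, 20, 23, 30), datetime(2024, 3, 21, 0, 30)),  # 60 min
]
print(mean_time_to_recovery(incidents))  # 0:40:00
```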

Recovery Time Objective

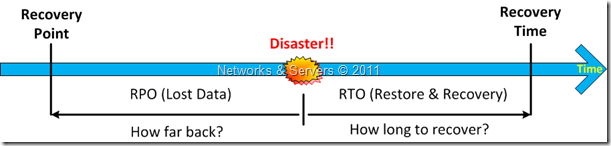

The Recovery Time Objective (RTO) is the time period after a disaster within which business functions need to be restored in order to avoid unacceptable consequences associated with a break in business continuity, i.e. the time that elapses between the loss of access to data and the recovery of data access. In other words, it is the maximum tolerable length of time that a computer, system, network, or application can be down after a failure or disaster occurs.

Different business functions may have different recovery time objectives. For example, the recovery time objective for the payroll function may be two weeks, whereas the recovery time objective for sales order processing may be two days.

The RTO (sometimes also referred to as Maximum Allowable Downtime) is a function of the extent to which the interruption disrupts normal operations and of the revenue lost per unit of time as a result of the disaster. The RTO is measured in seconds, minutes, hours, or days and includes the time spent trying to fix the problem without a recovery, the recovery itself, testing, and communication to the users. It is an important consideration in Disaster Recovery planning. The shorter the RTO, the better.
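Because the RTO has to cover every phase listed above, a simple budget check helps during planning. This is a sketch with hypothetical phase names and durations; in practice the estimates would come from rehearsed recovery tests.

```python
from datetime import timedelta


def rto_budget(phases, rto):
    """Compare the sum of recovery phases with the RTO.

    phases: mapping of phase name -> estimated duration (timedelta).
    Returns (met, total, slack); slack is negative when the RTO is missed.
    """
    total = sum(phases.values(), timedelta())
    return total <= rto, total, rto - total


phases = {
    "troubleshooting without recovery": timedelta(hours=1),
    "recovery": timedelta(hours=3),
    "testing": timedelta(hours=1, minutes=30),
    "communication to users": timedelta(minutes=30),
}
met, total, slack = rto_budget(phases, rto=timedelta(hours=8))
print(met, total, slack)  # True 6:00:00 2:00:00
```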

Recovery Point Objective

The Recovery Point Objective (RPO) expresses how much data a company can afford to lose following a disaster, since it corresponds to the point in time of the last backup that can be restored. Hence, it is generally a definition of what an organization considers an "acceptable loss" in a disaster situation.

The RPO is the age of files that must be recovered from backup storage for normal operations to resume if a computer, system, or network goes down as a result of a hardware, program, or communications failure. The RPO is expressed backward in time (that is, into the past) from the instant at which the failure occurs, and can be specified in seconds, minutes, hours, or days. It is also a very important consideration in Disaster Recovery planning.
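The RPO can be checked against a backup schedule. The sketch assumes backups complete instantly at the listed times (real backups take time and may need to be consistent snapshots), and the times are hypothetical: the data lost in a failure is everything written since the newest backup, and with periodic backups the worst case is roughly one full backup interval.

```python
from datetime import datetime, timedelta


def data_loss_window(failure_at, backup_times):
    """Age of the newest backup before the failure, i.e. the span of
    changes that would be lost by restoring from it."""
    earlier = [t for t in backup_times if t <= failure_at]
    if not earlier:
        raise ValueError("no backup precedes the failure")
    return failure_at - max(earlier)


def schedule_meets_rpo(backup_interval, rpo):
    # Worst case: the failure strikes just before the next backup runs.
    return backup_interval <= rpo


backups = [datetime(2024, 5, 2, h) for h in (0, 6, 12, 18)]  # every 6 h
failure = datetime(2024, 5, 2, 16, 45)
print(data_loss_window(failure, backups))  # 4:45:00
print(schedule_meets_rpo(timedelta(hours=6), rpo=timedelta(hours=4)))  # False
```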

Recovery Access Objective

The Recovery Access Objective (RAO) is a subcomponent of RTO that measures the time it takes for the network to re-establish connectivity of users, customers, and partners with the applications at the alternate site once the primary site has been disrupted. It identifies the point in time at which the users that were connected to applications and services running in one data center gain access to the same applications and services running at an alternate data center. The RAO goal will typically be lower than the RTO for any specific application, so that a recovered application is not left waiting for network access before it can resume providing services to users.

Network Recovery Objective

Very similar to the RAO, the Network Recovery Objective (NRO) indicates the time required to recover network operations. System-level recovery is not complete if customers cannot reach the application services over network connections. Hence, the NRO includes the time required to bring alternate communication links online, reconfigure routers and name servers (DNS), and change client system parameters to use alternative TCP/IP addresses. Comprehensive network failover planning is as important as data recovery in a Disaster Recovery scenario.
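Why the RAO and NRO matter can be shown with a tiny calculation: users regain access only when both the recovered application and the network path to the alternate site are back, so the effective restoration time is the later of the two. The function name and durations are hypothetical.

```python
from datetime import timedelta


def user_access_restored_after(app_recovery, network_recovery):
    """Time from disruption until users can actually reach the service.

    Both the application at the alternate site and the network path to it
    must be up, so the later of the two determines user access.
    """
    return max(app_recovery, network_recovery)


app = timedelta(hours=2)      # application recovered at the alternate site
network = timedelta(hours=3)  # links, routers and DNS reconfigured
print(user_access_restored_after(app, network))  # 3:00:00
print("application idle, waiting on network:", max(network - app, timedelta()))
```

Here the recovered application sits idle for an hour; keeping network recovery shorter than application recovery removes that wait.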

Maximum Tolerable Downtime

The Maximum Tolerable Downtime (MTD) is the time after which the process being unavailable creates irreversible (and often fatal) consequences; in other words, it is the maximum length of time a business function can be discontinued without causing irreparable harm to the business. Depending on the process, you can express the MTD in hours, days, or longer. Business functions associated with customer service and billing often have the shortest maximum tolerable downtimes. A similar and more standard term for this is the Maximum Tolerable Period of Disruption (MTPOD).

Work Recovery Time

The Work Recovery Time (WRT) is the remainder of the MTD, used to restore all business operations. Usually, the RTO covers recovery of the infrastructure, while the WRT is used to recover data and to test and verify processes.
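Since the WRT is what remains of the MTD after the RTO, the relationship is MTD = RTO + WRT. A minimal sketch with hypothetical figures:

```python
from datetime import timedelta


def work_recovery_time(mtd, rto):
    """WRT is the part of the MTD left over once the RTO has been used."""
    if rto > mtd:
        raise ValueError("RTO exceeds MTD: no time left for work recovery")
    return mtd - rto


mtd = timedelta(hours=24)  # hypothetical maximum tolerable downtime
rto = timedelta(hours=8)   # hypothetical infrastructure recovery target
print(work_recovery_time(mtd, rto))  # 16:00:00
```

Rejecting an RTO larger than the MTD flags a plan in which the infrastructure alone would already exceed what the business can tolerate.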

Serviceability

Serviceability is a measurement that expresses how easily a system can be serviced or repaired. For example, a system with modular, hot-swappable components would have a good level of serviceability. It can be expressed as the inverse of the maintenance time and number of crashes over the complete life span of a system, for example 1.5 h of service in 720 h of elapsed time (720 h is roughly one month). As such, it indicates how much sustainment work must be put into a system and how long it takes to bring it back up after a crash. As with availability, there are two measurements of interest: planned and actual serviceability.
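Using the figures from the example above, the share of time spent on service and its inverse can be computed directly. Treating serviceability as this inverse ratio is one reading of the definition, assumed here for illustration; the function names are hypothetical.

```python
def service_fraction(service_hours, elapsed_hours):
    """Share of elapsed time spent servicing or repairing the system."""
    return service_hours / elapsed_hours


def hours_per_service_hour(elapsed_hours, service_hours):
    """Inverse view: elapsed hours per hour of service (higher is better)."""
    return elapsed_hours / service_hours


print(f"{service_fraction(1.5, 720):.4%}")  # 0.2083%
print(hours_per_service_hour(720, 1.5))     # 480.0
```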

Cluster

A cluster is a collection of two or more machines, called nodes, connected together to appear as one. All the nodes in the cluster share common disks, called "shared disks", which hold the database and applications; in addition, each node has its own local disks containing the OS and other data critical to that node. Each node also has its own resources, such as CPUs, memory and disk controllers.

This is the typical setup; now let's look at the three main types of clusters:

- Failover Clusters are implemented by having redundant nodes, which are then used to provide services when a component, or one of the nodes, fails.

- Network Load Balancing clusters link multiple computers together to respond as a single virtual computer to a high volume of network requests. This balances the computational work among the machines, improving the performance of the cluster.

- Compute clusters are used primarily for computational purposes rather than for I/O-oriented operations such as web services or databases. Multiple computers are linked together to share a computational workload or to function as a single virtual computer.
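The load-balancing and failover ideas above can be combined in a small sketch: requests are handed out round-robin, and nodes marked as failed are skipped. This is an illustrative assumption, not how any particular clustering product works; the node names and the `mark_down`/`mark_up` health interface are hypothetical (a real cluster would drive them from health probes or heartbeats).

```python
import itertools


class RoundRobinBalancer:
    """Spread requests across cluster nodes, skipping nodes marked down."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.down = set()
        self._cycle = itertools.cycle(self.nodes)

    def mark_down(self, node):
        # Failover: stop routing to a node that has failed.
        self.down.add(node)

    def mark_up(self, node):
        self.down.discard(node)

    def pick(self):
        # Try each node at most once per call, in round-robin order.
        for _ in range(len(self.nodes)):
            node = next(self._cycle)
            if node not in self.down:
                return node
        raise RuntimeError("no healthy nodes available")


lb = RoundRobinBalancer(["node-a", "node-b", "node-c"])
lb.mark_down("node-b")
print([lb.pick() for _ in range(4)])  # ['node-a', 'node-c', 'node-a', 'node-c']
```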
