Cluster Node Configurations

The most common size for a high-availability cluster is two nodes, since that is the minimum required for redundancy. Many clusters are larger, however, sometimes consisting of dozens of nodes, and such configurations can be categorized into one of the following models:

Active/Passive Cluster

In an Active/Passive (or asymmetric) configuration, applications run on a primary, or master, server. A dedicated redundant server is present to take over in the event of any failure, but apart from that it is not configured to perform any other function. Thus, at any time, one of the nodes is active and the other is passive. This configuration provides a fully redundant instance of each node, which is brought online only when its associated primary node fails.

An active/passive cluster generally contains two identical nodes. A single instance of the database application is installed on each node, but the database itself resides on shared storage. During normal operation, the database instance runs only on the active node. If the currently active primary system fails, the clustering software transfers control of the disk subsystem to the secondary system. As part of the failover process, the database instance on the secondary node is started, thereby resuming the service.

This configuration is the simplest and most reliable, but it typically requires the most extra hardware.
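The failover sequence described above (move control of the shared disk, then start the instance on the secondary) can be sketched as a small state model. This is a minimal illustration, not a real clustering API: the `Node` and `ActivePassiveCluster` classes and their fields are hypothetical, and real cluster software also handles fencing, heartbeats and health checks that are omitted here.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical model of a cluster node (not a real clustering API)."""
    name: str
    healthy: bool = True
    instance_running: bool = False


@dataclass
class ActivePassiveCluster:
    """Two identical nodes sharing one disk subsystem; only the active node uses it."""
    active: Node
    passive: Node
    disk_owner: str = ""  # name of the node that currently controls the shared disk

    def __post_init__(self) -> None:
        # Normal operation: the active node owns the disk and runs the only instance.
        self.disk_owner = self.active.name
        self.active.instance_running = True

    def fail_over(self) -> None:
        """Move the service from the failed active node to the passive node."""
        failed, standby = self.active, self.passive
        if not standby.healthy:
            raise RuntimeError("no healthy standby node available")
        failed.healthy = False
        failed.instance_running = False
        # Step 1: transfer control of the shared disk subsystem to the secondary.
        self.disk_owner = standby.name
        # Step 2: start the database instance on the secondary, resuming service.
        standby.instance_running = True
        # The secondary is now the active node; the failed node awaits repair.
        self.active, self.passive = standby, failed


cluster = ActivePassiveCluster(Node("node1"), Node("node2"))
cluster.fail_over()
print(cluster.active.name, cluster.disk_owner)  # node2 node2
```

The ordering matters: the secondary only starts its instance after it has taken control of the disk, so the two instances never write to the shared database at the same time.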

Active/Active Cluster

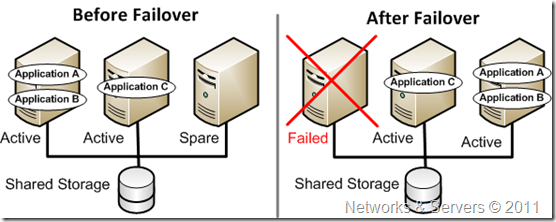

In this configuration (sometimes referred to as symmetric), each server is configured to run a specific application or service and provide redundancy for its peer. In this example, each server runs one application service group and in the event of a failure, the surviving server hosts both application groups.

In such clusters, each node is capable of taking on additional workload: the services failed over from the other node, plus the traffic intended for the failed node, are either passed on to an existing node or load-balanced across the remaining nodes. This is usually possible only when the nodes use a homogeneous software configuration and when different applications can run simultaneously on the same server without interfering with each other.

An active/active cluster uses the same hardware infrastructure as an active/passive one. However, database instances run concurrently on both servers and access the same database, which is located on shared storage. The instances must communicate with each other to negotiate access to the shared data. In the event of a server failure, the remaining server continues processing the workload, and even failed sessions can be reconnected.

Symmetric configurations appear to be more efficient in terms of hardware utilization, but that is not necessarily the case in every implementation. In the asymmetric example, the redundant server needs only as much processing power as its peer, and performance remains the same after failover. However, the standby server sits permanently idle. In the symmetric example, each server needs enough processing power to run both its existing application and the application it takes over if the other server fails. The benefit of an active/active cluster over an active/passive cluster is that, during normal processing, the workload is shared across both servers, so less processing power sits idle.
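The sizing argument above can be checked with a little arithmetic. The sketch below assumes abstract "load units" (a hypothetical stand-in for measured CPU, memory and I/O) and two identical servers, each hosting one application service group.

```python
def survivor_can_host_all(node_capacity: float, group_loads: list[float]) -> bool:
    """In a two-node symmetric cluster, the surviving node must run every group."""
    return node_capacity >= sum(group_loads)


def per_node_load(group_loads: list[float], nodes_up: int) -> float:
    """Average load per node when the groups are spread over the nodes that are up."""
    return sum(group_loads) / nodes_up


# Hypothetical figures: one 40-unit service group per server,
# and each server sized at 80 units so it can carry both groups alone.
groups = [40.0, 40.0]
capacity = 80.0

print(survivor_can_host_all(capacity, groups))       # True
print(per_node_load(groups, nodes_up=2) / capacity)  # 0.5: both nodes half busy
print(per_node_load(groups, nodes_up=1) / capacity)  # 1.0: survivor fully busy
```

With two nodes, both layouts need total capacity of twice the workload if full performance must survive a failure. The difference is where the headroom sits: in the symmetric layout it is spread over two busy servers rather than parked in an idle standby.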

N-to-1 Cluster

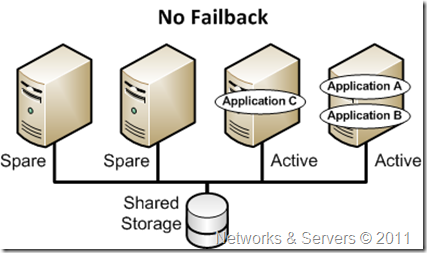

This configuration allows the standby node to become active temporarily, until the original node can be restored and brought back online, at which point the services or instances must be failed back to it in order to restore high availability.

In a multinode cluster, each standby (passive) server can be the failover target for more than one active server. For example, an enterprise with three mission-critical applications could run them on a four-node cluster with three active servers (A, B, and C), each of which fails over to the same passive server (D). The passive server is usually idle; it becomes active only when another server in the cluster fails. N represents the number of active servers, and 1 represents the standby or spare node.

This is an attractive option because running the same three applications on an active/passive architecture would need six servers (three active, three passive). That means three of the six servers are idle at any time: 100% hardware overhead! With the four-node N-to-1 architecture, the overhead is just 33% (one spare for three active servers).
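The overhead figures above are simply the number of standby servers divided by the number of active servers. A short sketch of that calculation (the function name is illustrative):

```python
def hardware_overhead(active: int, standby: int) -> float:
    """Standby servers as a fraction of active servers."""
    return standby / active


# Three mission-critical applications, one per active server.
print(f"{hardware_overhead(3, 3):.0%}")  # active/passive pairs: 100%
print(f"{hardware_overhead(3, 1):.0%}")  # N-to-1 with one shared spare: 33%
```

In general, N-to-1 overhead is 1/N, so it shrinks as more active servers share the same spare, at the cost of covering only one failure at a time.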

An N-to-1 failover configuration reduces the cost of hardware redundancy and still provides a dedicated spare server. This configuration is based on the concept that multiple, simultaneous server failures are unlikely; therefore, a single redundant server can protect multiple active servers.

The problem with this design is failback. The dedicated passive spare has to return to its original state so that it is available for any subsequent failover from the other servers. In the N-to-1 model, once a failed server is brought back online, its workload must also be moved back to it from the server to which it failed over.

In other words, the cluster must be restored to its original arrangement, with the server designated as the dedicated spare always returning to being the passive spare it was originally configured to be. For administrators, that means more work and more downtime; for the application and its users, restoring the original configuration may mean another outage.
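The failover and mandatory failback of the N-to-1 model can be sketched as follows, using the A, B, C and D servers from the example. The `NToOneCluster` class and application names are hypothetical; the point is that the spare can absorb only one failure at a time and must be freed by an explicit failback.

```python
from typing import Optional


class NToOneCluster:
    """Hypothetical N-to-1 model: every active server fails over to one dedicated spare."""

    def __init__(self, home: dict[str, str], spare: str) -> None:
        self.home = dict(home)          # application -> its home (active) server
        self.placement = dict(home)     # application -> server currently running it
        self.spare = spare              # the dedicated passive server
        self.spare_busy_with: Optional[str] = None

    def fail(self, server: str) -> str:
        """Fail the application on `server` over to the spare and return its name."""
        if self.spare_busy_with is not None:
            # A single spare cannot cover a second simultaneous failure.
            raise RuntimeError("spare already in use")
        app = next((a for a, s in self.placement.items() if s == server), None)
        if app is None:
            raise KeyError(f"{server} is not running any application")
        self.placement[app] = self.spare
        self.spare_busy_with = app
        return app

    def fail_back(self, app: str) -> None:
        """After repair, move `app` back to its home server so the spare is free again.
        For the application, this move is a second (planned) outage."""
        if self.placement.get(app) != self.spare:
            raise ValueError(f"{app} is not running on the spare")
        self.placement[app] = self.home[app]
        self.spare_busy_with = None


cluster = NToOneCluster({"app1": "A", "app2": "B", "app3": "C"}, spare="D")
cluster.fail("B")          # app2 now runs on D
cluster.fail_back("app2")  # B repaired: app2 returns to B, D is idle again
```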

N+1 Cluster

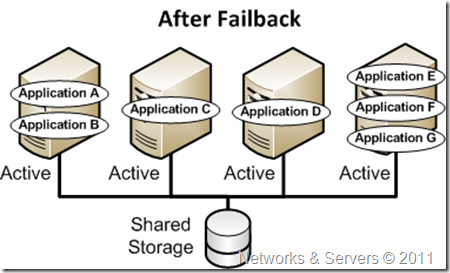

This configuration provides a single extra node that is brought online to take over the role of whichever node has failed. If the primary nodes run heterogeneous software configurations, the extra node must be capable of assuming any of the roles of the primary nodes it protects. This model normally applies to clusters running multiple services simultaneously; with a single service, it degenerates to Active/Passive.

In this configuration, there is no longer a need for a permanently dedicated redundant server. In advanced N+1 configurations, one extra server in the cluster provides spare capacity only. When a server fails, its application service group restarts on the spare, and the failed server itself becomes the new spare once it is repaired. This eliminates the second application outage that would otherwise be needed to fail the service group back to the primary system. With this arrangement, any server in the cluster can act as the spare for any other server, and failed applications do not have to fail back to the server from which they came.

This provides all the benefits of asymmetric clustering, namely a fully available spare with no performance or interoperability concerns, together with the cost savings of N-to-1, without its failback limitation. N+1 clustering is much more cost-effective from a server perspective than the active/passive or even active/active configurations of the past, and it does not have the downsides of the N-to-1 cluster described above.
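The rotating-spare behaviour of advanced N+1 can be contrasted with N-to-1 in a similar sketch. The `RotatingSpareCluster` class, the server names and the `installed` map are hypothetical; the map models the requirement that, with heterogeneous software, whichever server is the spare must be able to run every role.

```python
from typing import Optional


class RotatingSpareCluster:
    """Hypothetical advanced N+1 model: the repaired server becomes the next spare."""

    def __init__(self, placement: dict[str, str], spare: str,
                 installed: dict[str, set[str]]) -> None:
        self.placement = dict(placement)   # application -> server currently running it
        self.spare: Optional[str] = spare  # the one idle server, if any
        self.installed = installed         # server -> applications it is able to run

    def fail(self, server: str) -> None:
        """Restart every application from `server` on the current spare."""
        if self.spare is None:
            raise RuntimeError("no spare available for a second failure")
        target = self.spare
        apps = [a for a, host in self.placement.items() if host == server]
        missing = [a for a in apps if a not in self.installed[target]]
        if missing:
            # With heterogeneous software, the spare must be able to run every role.
            raise RuntimeError(f"spare {target} cannot run {missing}")
        for app in apps:
            self.placement[app] = target
        self.spare = None

    def repair(self, server: str) -> None:
        """The repaired server simply becomes the new spare; nothing fails back."""
        self.spare = server


apps = {"app1", "app2", "app3"}
cluster = RotatingSpareCluster(
    {"app1": "A", "app2": "B", "app3": "C"},
    spare="D",
    installed={s: set(apps) for s in "ABCD"},  # every server can run every application
)
cluster.fail("B")    # app2 restarts on D
cluster.repair("B")  # B becomes the spare; app2 stays on D
cluster.fail("A")    # app1 restarts on B
print(cluster.placement)  # {'app1': 'B', 'app2': 'D', 'app3': 'C'}
```

Compared with the N-to-1 case, `repair` does not move any application, which is exactly the extra outage this configuration avoids.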

N+M Cluster

In cases where a single cluster is managing many services, having only one dedicated failover node may not offer sufficient redundancy.

In such cases, more than one standby server (M in total) is included and available.

The number of standby servers is a tradeoff between cost and reliability requirements.
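One way to reason about that tradeoff is a simple binomial model. This sketch rests on loud assumptions that real clusters often violate: each node is down independently with the same probability p, any standby can take over any role, and repair times and correlated failures (shared power, network, or storage) are ignored. Under those assumptions, an N+M cluster loses service only when more than M of its N+M nodes are down at once.

```python
from math import comb


def prob_service_loss(n_active: int, m_standby: int, p: float) -> float:
    """Probability that more than M of the N+M nodes are down at the same moment.

    Assumes each node is down independently with probability p and that any standby
    can take over any role, so the cluster survives up to M simultaneous failures.
    """
    total = n_active + m_standby
    return sum(
        comb(total, k) * p**k * (1 - p) ** (total - k)
        for k in range(m_standby + 1, total + 1)
    )


# Hypothetical figures: 8 active servers, each unavailable 1% of the time.
for m in range(4):
    print(f"M={m}: {8 + m} servers, P(service loss) = {prob_service_loss(8, m, 0.01):.2e}")
```

Each extra standby adds one server of cost while sharply reducing the modelled risk, which is the cost/reliability tradeoff in numeric form.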

N-to-N Cluster

This configuration is a combination of the Active/Active and N+M models. N-to-N clusters redistribute the services or instances of a failed node among the remaining active nodes, which eliminates the need for a reserve node (as with Active/Active) but requires extra capacity on all active nodes.

N-to-N clustering is the most complex of the failover configurations, and is typically used in a highly available architecture supporting multiple applications in a server consolidation environment. An N-to-N configuration refers to multiple service groups running on multiple servers, with each service group capable of being failed over to different servers in the cluster.

For example, imagine a four-node cluster in which each node supports three critical database instances. If any node fails, each of its three instances is started on a different surviving node, ensuring that no single node becomes overloaded.

This configuration is a logical evolution of N+1, as it provides standby capacity spread across the cluster instead of a standby server in the cluster.
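The four-node example can be sketched with a greedy "least-loaded survivor first" placement rule. That rule is an assumption chosen for illustration; real cluster managers typically follow configured failover policies or priority lists. The function and the node and instance names are hypothetical.

```python
def redistribute(placement: dict[str, list[str]], failed: str) -> dict[str, list[str]]:
    """Spread the failed node's instances over the surviving nodes.

    Each instance goes to the survivor currently running the fewest instances,
    with ties broken by node name so the result is deterministic.
    """
    survivors = {node: list(insts) for node, insts in placement.items() if node != failed}
    if not survivors:
        raise RuntimeError("no surviving nodes")
    for instance in placement[failed]:
        target = min(survivors, key=lambda n: (len(survivors[n]), n))
        survivors[target].append(instance)
    return survivors


# Four nodes, each running three database instances (db11..db43).
placement = {f"node{i}": [f"db{i}{j}" for j in range(1, 4)] for i in range(1, 5)}
after = redistribute(placement, "node2")
for node, instances in after.items():
    print(node, instances)
# node1 ['db11', 'db12', 'db13', 'db21']
# node3 ['db31', 'db32', 'db33', 'db22']
# node4 ['db41', 'db42', 'db43', 'db23']
```

Because every survivor starts with the same load, the three instances from node2 land on three different nodes, and each survivor ends up with four instances instead of one node absorbing all three.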

