Cluster Quorum Configurations

In simple terms, the quorum for a cluster is the number of elements that must be online for that cluster to continue running. Server clusters require a quorum resource to function. Like any other resource, the quorum resource can be owned by only one server at a time, and servers can negotiate for its ownership. In effect, each element can cast one “vote” to determine whether the cluster continues running. The voting elements are nodes or, in some cases, a disk witness or file share witness. The quorum resource stores the definitive copy of the cluster configuration so that, regardless of any sequence of failures, the cluster configuration always remains consistent. Each voting element (with the exception of a file share witness) contains a copy of the cluster configuration, and the Cluster service works to keep all copies synchronized at all times.

When network problems occur, they can interfere with communication between cluster nodes. A small set of nodes might be able to communicate together across a functioning part of a network but not be able to communicate with a different set of nodes in another part of the network. This can cause serious issues. In this "split" situation, at least one of the sets of nodes must stop running as a cluster.

Negotiating for the quorum resource allows Server clusters to avoid "split-brain" situations, in which servers on both sides of a split stay active because each side thinks the other servers are down.

To prevent the issues that are caused by a split in the cluster, the cluster software requires that any set of nodes running as a cluster use a voting algorithm to determine whether, at a given time, that set has quorum. Because a given cluster has a specific set of nodes and a specific quorum configuration, the cluster knows how many "votes" constitute a majority (that is, a quorum). If the number of votes drops below the majority, the cluster stops running. Nodes will still listen for the presence of other nodes, in case another node appears again on the network, but the nodes will not begin to function as a cluster until quorum exists again.
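The majority check described above can be sketched in a few lines of Python. This is an illustrative model only, not the Cluster service's actual implementation; the function name `has_quorum` and its parameters are hypothetical.

```python
def has_quorum(total_votes: int, online_votes: int) -> bool:
    """Return True when the online voters hold a strict majority of all votes.

    Hypothetical helper for illustration; it only models the counting rule.
    """
    if not 0 <= online_votes <= total_votes:
        raise ValueError("online_votes must be between 0 and total_votes")
    # "More than half" means a tie is not enough.
    return 2 * online_votes > total_votes


# A cluster with five votes keeps running with three online voters,
# but stops once only two remain.
print(has_quorum(5, 3))  # True
print(has_quorum(5, 2))  # False
```

Note that exactly half the votes is not a majority, which is why the configurations below aim for an odd total number of votes.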

Note that the full function of a cluster depends not just on quorum, but on the capacity of each node to support the services and applications that fail over to that node. For example, a cluster that has five nodes could still have quorum after two nodes fail, but each remaining cluster node would continue serving clients only if it had enough capacity to support the services and applications that failed over to it.

Node Majority

This is the easiest quorum type to understand and is recommended for clusters with an odd number of nodes (3 nodes, 5 nodes, etc.). The Node Majority mode assigns votes only to the cluster nodes; every node has 1 vote, so there is an odd number of total votes in the cluster. This means that the cluster can sustain failures of half the nodes (rounding up) minus one. A 5-node cluster, for instance, can lose 2 nodes and keep running.

If there is a partition between two subsets of nodes, each node that is available and in communication can vote and the subset with more than half the nodes will maintain quorum.

For example, if a 5-node cluster partitions into a 3-node subset and a 2-node subset, the 3-node subset will stay online and the 2-node subset will go offline until it can reconnect with the other 3 nodes.
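The Node Majority arithmetic can be worked through with a small sketch. The function names `tolerated_node_failures` and `surviving_partition` are hypothetical and exist only to illustrate the rule and the partition example above.

```python
import math
from typing import List, Optional


def tolerated_node_failures(node_count: int) -> int:
    """Node failures a Node Majority cluster can sustain: ceil(n / 2) - 1."""
    return math.ceil(node_count / 2) - 1


def surviving_partition(partition_sizes: List[int]) -> Optional[int]:
    """Return the index of the partition holding more than half the nodes,
    or None when no partition has a majority."""
    total_nodes = sum(partition_sizes)
    for index, size in enumerate(partition_sizes):
        if 2 * size > total_nodes:
            return index
    return None


print(tolerated_node_failures(5))   # 2
print(surviving_partition([3, 2]))  # 0, the 3-node subset stays online
```

With an even split such as `[2, 2]`, the function returns `None`: neither subset holds more than half the nodes, which is why Node Majority is suggested for odd node counts.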

Node & Disk Majority

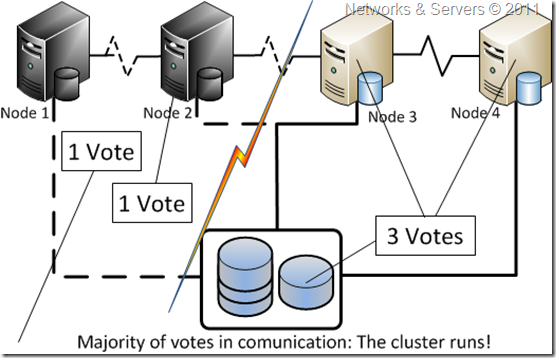

This quorum configuration is the most commonly used since it works well with 2-node and 4-node clusters, which are the most common deployments. This mode is preferable in situations with an even number of nodes that have shared storage available, because in the Node and Disk Majority mode each node has a vote, as does a shared disk called the disk witness (also referred to as the ‘quorum disk’). Since there is an even number of nodes and 1 additional Disk Witness vote, in total there will be an odd number of votes. The cluster functions only with a majority of the votes, that is, more than half.

The Disk Witness is simply a small clustered disk in the Cluster Available Storage group, so it is highly available and can fail over between nodes. It is considered part of the Cluster Core Resources group; however, it is generally hidden from view in Failover Cluster Manager because it does not need to be interacted with.

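This mode can sustain failures of half the nodes (rounding up) if the disk witness remains online, or failures of half the nodes (rounding up) minus one if the disk witness goes offline or fails. For a 4-node cluster, that is 2 node failures with the witness online, or 1 node failure with the witness offline.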

If there is a partition between two subsets of nodes, the subset with more than half the votes will maintain quorum. For example, if you have four nodes that become equally partitioned then only one of the partitions can own the disk witness, which gives that partition an additional vote. The partition that owns the disk witness can therefore make quorum and offer services.
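The effect of the disk witness on an even split can be illustrated with a short sketch. The function `partition_has_quorum` is a hypothetical model of the vote counting, not an actual cluster API.

```python
def partition_has_quorum(nodes_in_partition: int,
                         total_nodes: int,
                         owns_disk_witness: bool) -> bool:
    """Model Node and Disk Majority: each node has one vote and the disk
    witness adds one more vote to the partition that owns it."""
    total_votes = total_nodes + 1  # all nodes plus the disk witness
    partition_votes = nodes_in_partition + (1 if owns_disk_witness else 0)
    return 2 * partition_votes > total_votes


# Four nodes split evenly: only the half that owns the witness has quorum.
print(partition_has_quorum(2, 4, owns_disk_witness=True))   # True  (3 of 5 votes)
print(partition_has_quorum(2, 4, owns_disk_witness=False))  # False (2 of 5 votes)
```

The same function also reproduces the failure limits stated earlier: with the witness offline, three of four nodes are needed (`partition_has_quorum(3, 4, False)` is `True`), so only one node failure can be sustained.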

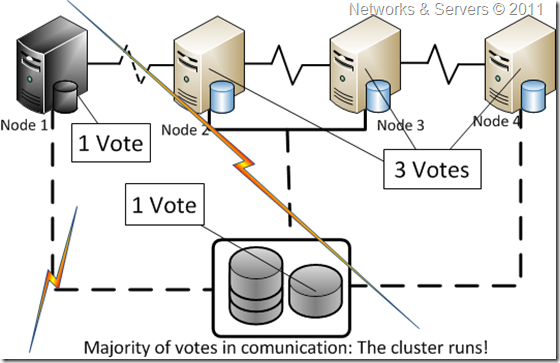

Node & File Share Majority

This mode works in exactly the same way as the Node and Disk Majority mode, except that the disk witness is replaced with a file share that all nodes in the cluster can access, instead of a disk in cluster storage. This file share is called the File Share Witness (FSW) and is simply a file share on any server in the same AD Forest to which all the cluster nodes have access. One node in the cluster will place a lock on the file share, which makes it the ‘owner’ of that file share, and another node will grab the lock if the original owning node fails. In this configuration every node gets 1 vote, and additionally the remote file share gets 1 vote.

This mode is recommended if you have an even number of nodes and you don’t have shared storage available. On a standalone server, the file share by itself is not highly available; however, it can be created on a clustered file share on an independent cluster, making the FSW clustered and giving it the ability to fail over between nodes. It is important not to place this vote on a node of the same cluster or within a VM on that cluster, because losing that single node would also cause the loss of the FSW vote, so two votes would be lost in a single failure. A single file server can host multiple FSWs for multiple clusters. The file share witness can vote, but it does not contain a replica of the cluster configuration database, nor does it contain information about which version of the cluster configuration database is the most recent.

This quorum configuration is usually used in multi-site clusters. Generally multi-site clusters have two sites with an equal number of nodes at each site, giving an even number of nodes. By adding this additional vote at a 3rd site, there is an odd number of votes in the cluster, at very little expense compared to deploying a 3rd site with an active cluster node and writable DC. This means that either site or the FSW can be lost and the cluster can still maintain quorum. For example, in a multi-site cluster with 2 nodes at Site1, 2 nodes at Site2 and a FSW at Site3, there are 5 total votes.

If there is a partition between the sites, one of the nodes at a site will own the lock to the FSW, so that site will have 3 total votes and will stay online. The 2-node site will go offline until it can reconnect with the other 3 voters.
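The multi-site example can be checked with a small sketch that removes one location at a time and counts the remaining votes. The helper `survives_loss` and the location labels are hypothetical; the vote counts come from the example above (2 nodes at Site1, 2 nodes at Site2, and an FSW at Site3).

```python
from typing import Dict


def survives_loss(votes_by_location: Dict[str, int], lost_location: str) -> bool:
    """Return True if the remaining locations still hold a majority of votes."""
    total_votes = sum(votes_by_location.values())
    remaining_votes = total_votes - votes_by_location[lost_location]
    return 2 * remaining_votes > total_votes


votes = {"Site1": 2, "Site2": 2, "Site3 (FSW)": 1}
for location in votes:
    # Each line prints the location followed by True: losing any single
    # location still leaves at least 3 of the 5 votes.
    print(location, survives_loss(votes, location))
```

Without the third-site vote, the same check fails: with only `{"Site1": 2, "Site2": 2}`, losing either site leaves 2 of 4 votes, which is not a majority.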

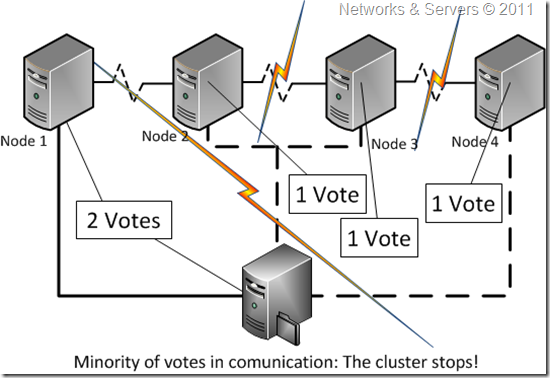

No Majority: Disk Only

The Disk Only quorum type was available in Windows Server 2003 and has been maintained for compatibility reasons. The cluster has quorum with a single node available, as long as this node is in communication with a specific disk in the cluster storage. In this situation, only the nodes that are in communication with that disk can join the cluster.

In a cluster with the Disk Only configuration, the number of nodes does not affect how quorum is achieved; the disk is the quorum. This kind of cluster can sustain failures of all nodes except one, as long as the disk is online. However, if communication with the disk is lost, the cluster becomes unavailable. This configuration is not recommended because the disk might be a single point of failure, and it is also important to consider whether that last remaining node can even handle the capacity of all the workloads that have moved to it from other nodes.
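For contrast with the majority-based modes, the Disk Only rule can be modeled as a check that ignores the node count entirely. The function `disk_only_has_quorum` is a hypothetical illustration of the rule described above, not a real cluster call.

```python
def disk_only_has_quorum(nodes_connected_to_disk: int, disk_online: bool) -> bool:
    """Model the Disk Only rule: the disk is the quorum, so one node that can
    reach an online quorum disk is enough, and no number of nodes helps when
    the disk is unavailable."""
    return disk_online and nodes_connected_to_disk >= 1


print(disk_only_has_quorum(1, disk_online=True))   # True: one node plus the disk
print(disk_only_has_quorum(4, disk_online=False))  # False: disk lost, cluster down
```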

The illustrations show how a cluster that uses the disk as the only determiner of quorum can run even if only one node is available and in communication with the quorum disk.

They also show how the cluster cannot run if the quorum disk is not available (single point of failure). For this cluster, which has an odd number of nodes, Node Majority is the recommended quorum mode.

Choosing the quorum mode for a particular cluster

| Description of cluster | Quorum recommendation |
| --- | --- |
| Odd number of nodes | Node Majority |
| Even number of nodes (but not a multi-site cluster) | Node and Disk Majority |
| Even number of nodes, multi-site cluster | Node and File Share Majority |
| Even number of nodes, no shared storage | Node and File Share Majority |
| Exchange CCR cluster (two nodes) | Node and File Share Majority |

A “multi-site” cluster is a cluster in which an investment has been made to place sets of nodes and storage in physically separate locations, providing a disaster recovery solution. An “Exchange Cluster Continuous Replication (CCR)” cluster is a failover cluster that includes Exchange Server 2007 with Cluster Continuous Replication, a high availability feature that combines asynchronous log shipping and replay technology.
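The recommendation table can be expressed as a simple lookup function. This is a sketch only: the function name, its parameters, and the order in which the conditions are checked are assumptions made for illustration, since the table itself does not say which row wins when several apply.

```python
def recommend_quorum_mode(node_count: int,
                          multi_site: bool = False,
                          shared_storage: bool = True,
                          exchange_ccr: bool = False) -> str:
    """Map a cluster description to the quorum mode suggested in the table.

    Assumed precedence: Exchange CCR first, then odd node counts, then the
    even-node rows.
    """
    if exchange_ccr:
        return "Node and File Share Majority"
    if node_count % 2 == 1:
        return "Node Majority"
    if multi_site or not shared_storage:
        return "Node and File Share Majority"
    return "Node and Disk Majority"


print(recommend_quorum_mode(5))                         # Node Majority
print(recommend_quorum_mode(4))                         # Node and Disk Majority
print(recommend_quorum_mode(4, multi_site=True))        # Node and File Share Majority
print(recommend_quorum_mode(2, shared_storage=False))   # Node and File Share Majority
```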

1 comment:

Cluster quorum configuration is a vital aspect of ensuring high availability in a failover cluster. It determines the minimum number of nodes required for the cluster to maintain its integrity and continue functioning during failures. Here's a breakdown of the different configurations and their considerations:

1. Node Majority Quorum:


Description: This is the simplest and most common configuration. Each node in the cluster has one vote. The cluster maintains a quorum as long as a majority of the nodes (more than half) are online and communicating.

Benefits:

Easy to understand and implement.

Suitable for clusters with an odd number of nodes (3, 5, 7, etc.).

Drawbacks:

With an even number of nodes, the cluster can partition into two isolated sections of equal size. Neither side will have a majority, so the cluster becomes unavailable.
